Top 5 Machine Learning Algorithms

Machine learning drives the biggest AI breakthroughs we see today - from Tesla's self-driving cars to DeepMind's protein-folding discoveries. These amazing results have created huge excitement around the technology. But what is machine learning exactly? How does it work? And does it live up to the hype?

This guide explains key machine learning concepts in simple terms, shows real-world applications, and offers starting points for beginners. Whether you're curious about AI's current revolution or thinking about learning these skills, you'll understand what machine learning actually is and why it matters.

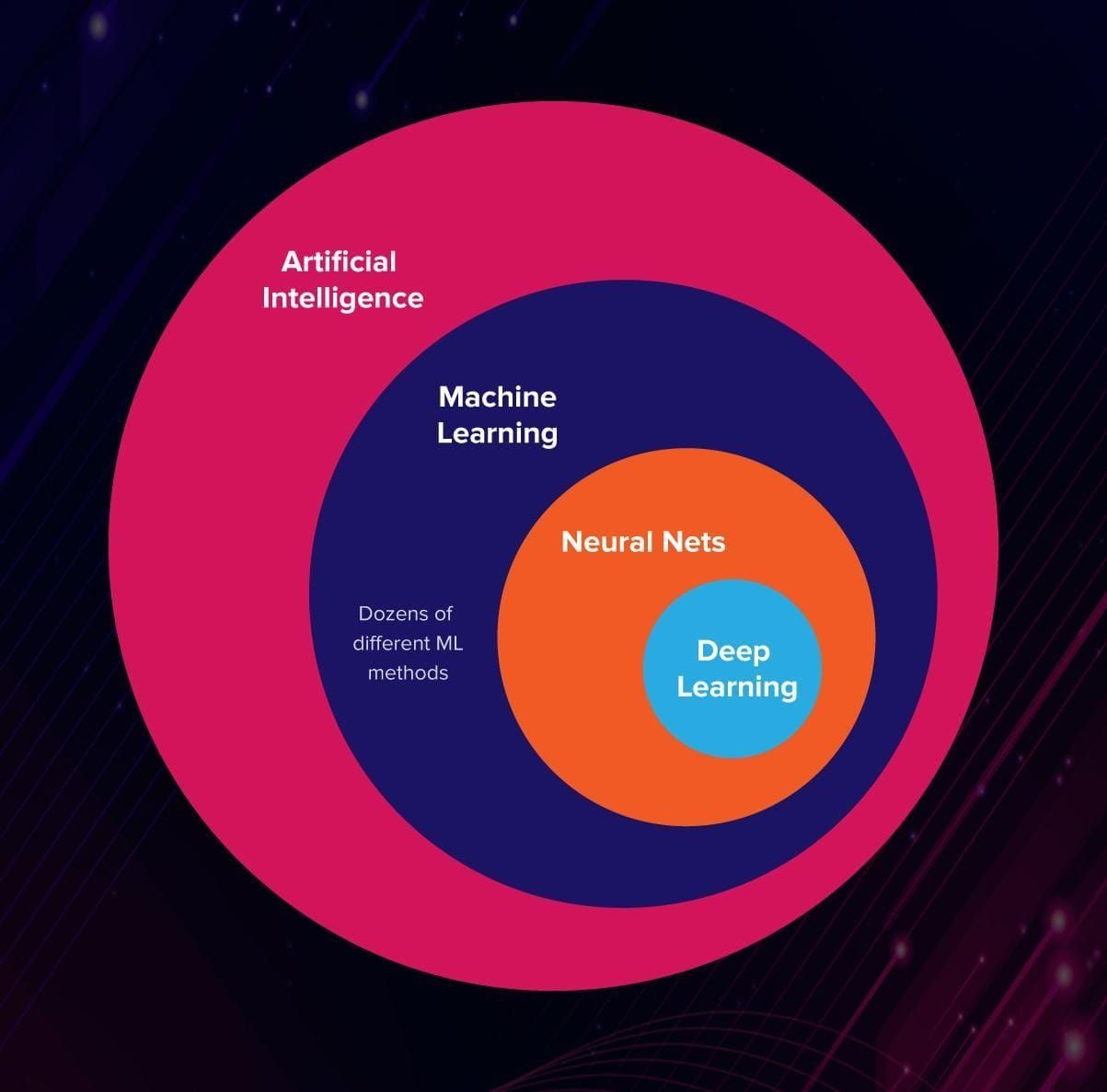

Artificial Intelligence

Andrew Ng, who helped create Google Brain and worked as Baidu's Chief Scientist, describes AI as a "massive toolkit for making computers act smart."

This definition covers a wide range of technology - from basic calculators that follow exact instructions to sophisticated machine learning programs that learn to spot spam by studying millions of examples.

What makes Ng's definition useful is that it shows AI isn't just one thing, but an entire collection of approaches and techniques. Whether a computer follows pre-written rules or learns patterns from data, if it solves problems intelligently, it falls under the AI umbrella.

This broad view explains why AI appears in so many forms, from simple automated systems we use daily to complex learning algorithms that adapt and improve over time.

Machine Learning

Machine learning is a branch of AI where computer programs automatically discover patterns by studying past data. Instead of being told exactly what to do step-by-step, these systems learn on their own and make predictions about new information.

Traditional computer programs, like calculators, follow specific instructions for every situation. This works for simple tasks but becomes impossible with complex, constantly changing problems.

Take email spam filtering as an example. The old-fashioned approach creates rules like "block emails with suspicious subject lines." But this fails because spammers adapt - once they figure out your rules, they change tactics to bypass them.

Machine learning works differently. Instead of rigid rules, you feed the system millions of real spam emails. The program analyzes this data and automatically identifies common "spammy" traits. When new emails arrive, it compares them against these learned patterns to decide if they're spam.

The key difference? Traditional methods break down with new situations, while machine learning systems improve at handling change because they've learned underlying patterns rather than following fixed rules.

Deep Learning

Deep learning is a specialized type of machine learning that handles the most complex AI tasks we see in everyday life. These systems work similarly to how our brains process information and need massive amounts of data to learn effectively.

You'll find deep learning powering voice recognition on your phone, language translation apps, self-driving cars, and other "smart" technologies that seem almost human-like in their abilities.

What makes deep learning special is its ability to tackle problems that require understanding patterns the way humans do - recognizing speech, understanding language, or making split-second driving decisions.

The Different Types of Machine Learning

Now that you understand what machine learning is and how it connects to other AI concepts, let's explore the main types of machine learning algorithms.

Machine learning breaks down into four main categories: supervised, unsupervised, reinforcement, and self-supervised learning. Each type works differently and solves different kinds of problems. Let's look at how each one works and where you'll typically see them used.

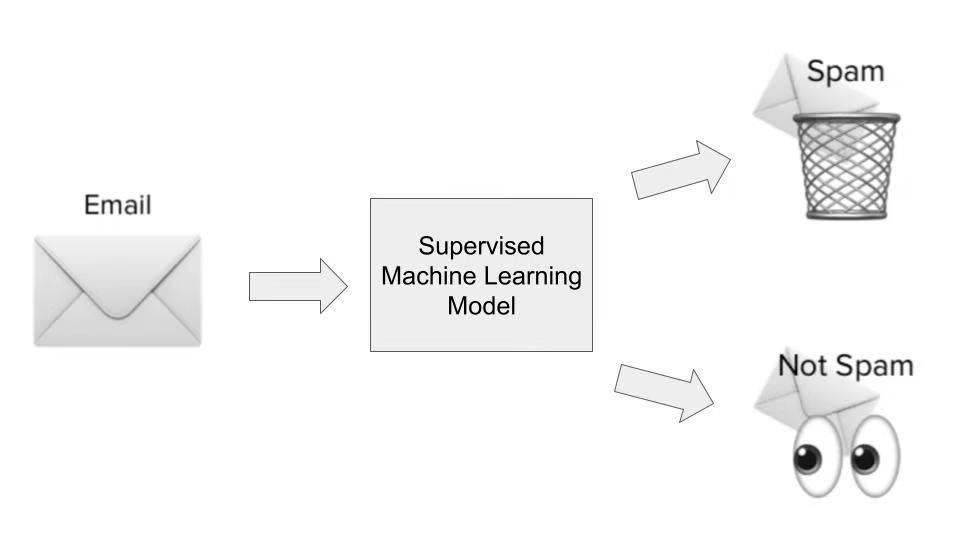

Supervised Machine Learning

Most machine learning works through supervised learning - where algorithms study past examples to make predictions about new situations.

Here's how it works: you show the algorithm both the inputs (the information you have) and the correct outputs (what you want to predict). The system learns the connection between them, then applies this knowledge to new data.

Let's use spam detection as an example. To train a supervised learning system, you'd feed it thousands of emails that are already labeled as "spam" or "not spam."

For each email, the algorithm looks at features like:

- Subject line text

- Sender's email address

- Email content

- Suspicious links

- Other clues that might indicate spam

By studying these labeled examples, the system learns which combinations of features typically mean "spam." Then when a new email arrives, it can analyze the same features and predict whether it's spam or not.

The output is simply "spam" or "not spam." The algorithm studies thousands of these examples and figures out which email features usually lead to which outcome. Once trained, it uses this knowledge to classify new emails it's never seen before.

Supervised learning handles two main types of problems:

- Regression predicts numbers within a range. Think house prices - the algorithm considers square footage, location, bedrooms, and other factors to predict a specific dollar amount.

- Classification sorts things into categories. Our spam detector is classification (spam vs. not spam). Other examples include predicting if customers will cancel their subscriptions or identifying objects in photos.

The key difference? Regression gives you a number, classification gives you a category.

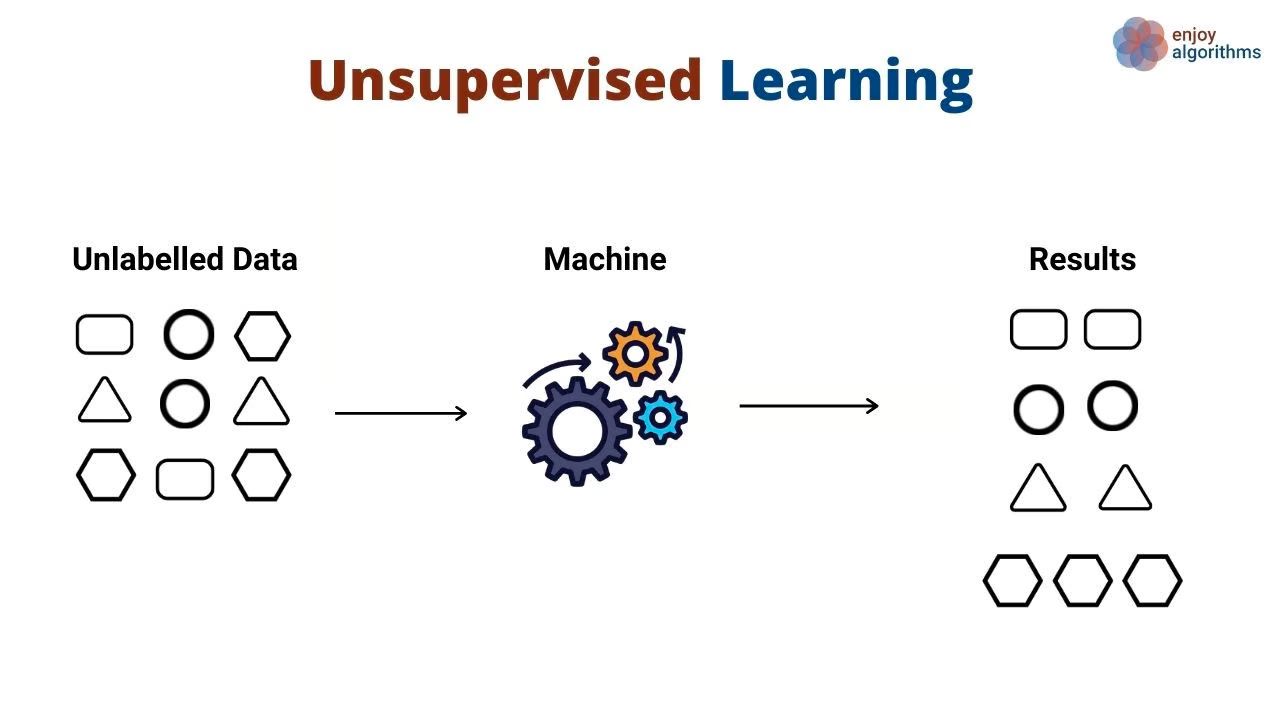

Unsupervised Machine Learning

Unsupervised learning works differently - it finds hidden patterns in data without being told what to look for. Instead of learning from examples with correct answers, these algorithms explore data and discover groupings on their own.

Customer segmentation is a perfect example. Companies know they have different types of customers, but want data to back up their hunches about who these groups are.

An unsupervised algorithm analyzes customer data like:

- How often do they use the product?

- Age, location, and other demographics.

- How they interact with features.

- Purchase patterns.

The system automatically discovers natural customer groups - maybe frequent power users, occasional bargain hunters, or new users still exploring. Once it identifies these segments, it can place new customers into the right group based on their behavior.

The key difference? Nobody tells the algorithm what groups to find - it discovers them naturally from the data patterns.

Unsupervised learning finds hidden patterns without being given examples of right and wrong answers. These algorithms explore data and automatically discover natural groupings.

Customer segmentation shows how this works. Companies know they have different customer types but need data to prove it.

The algorithm studies customer information - usage frequency, demographics, feature interactions, and buying habits. It then discovers groups like power users, bargain hunters, or newcomers exploring the product.

Once trained, it can automatically sort new customers into these segments based on their behavior patterns.

The key point? The system finds these groups on its own - no one tells it what to look for.

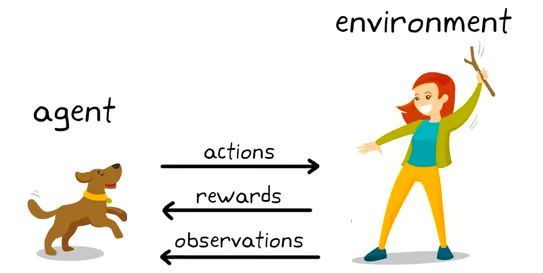

Reinforcement Learning

Reinforcement learning (RL) is a type of machine learning where an agent learns by getting rewards for good actions and penalties for bad ones. Though still largely in the research stage, RL has already powered systems that beat humans in games like Chess and Go.

Think of it like trial and error. The model (called an agent) interacts with an environment (like a chessboard), making decisions by playing different moves.

Each move earns a score—positive if it helps win, negative if it leads to a loss. Over time, the agent learns which actions bring the most rewards and repeats them. This is called exploitation. When it tries new moves to discover better outcomes, it’s in the exploration phase. Together, this process is known as the exploration-exploitation trade-off.

kdnuggets.com

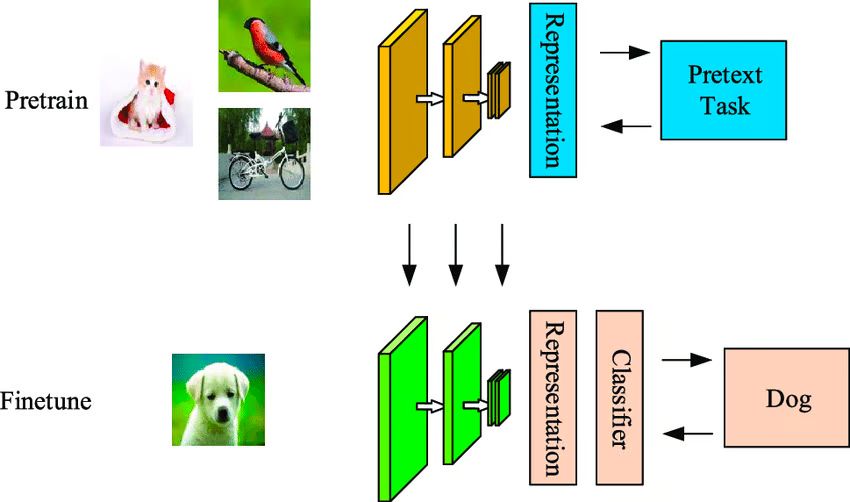

Self-Supervised Machine Learning

Self-supervised learning is a machine learning method that doesn't need labeled data. Instead, the model learns from raw, unlabeled inputs.

For example, a model is first given a bunch of unlabeled images. It groups them into clusters based on patterns it finds. Some images clearly fit into a group (high confidence), while others don’t.

In the next step, the model uses the confidently grouped images as labeled data to train a more accurate classifier, often more effective than using clustering alone.

asset-global

The key difference between self-supervised and supervised learning is that self-supervised models generate their own labels instead of relying on manually labeled data. While they can classify patterns, the output classes may not be linked to real-world objects , unlike in supervised learning, where labels are predefined and meaningful.

Top Most Popular Machine Learning Algorithms

Below, we’ve outlined some of the top machine learning algorithms and their most common use cases.

Top Supervised Machine Learning Algorithms

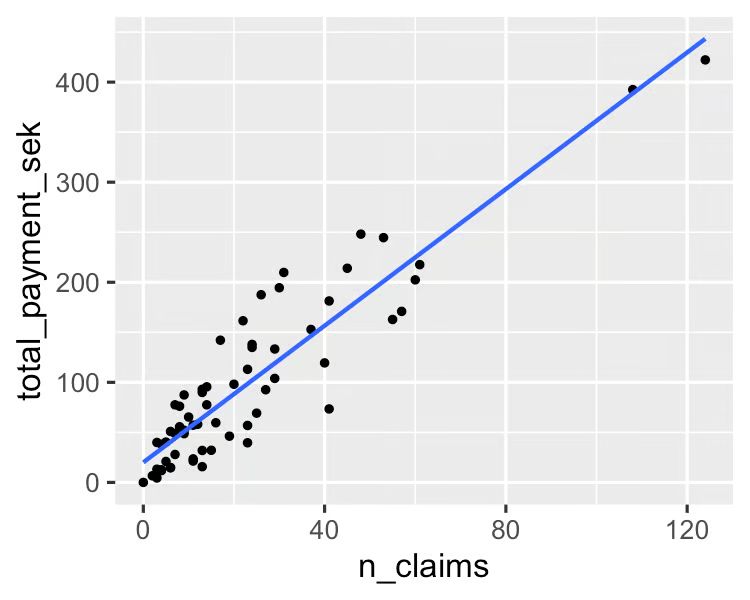

1. Linear Regression: A linear regression algorithm finds a straight-line relationship between input variables and a continuous output. It’s quick to train and easy to understand, making it great for explaining predictions. Common use cases include predicting customer lifetime value, housing prices, and stock trends.

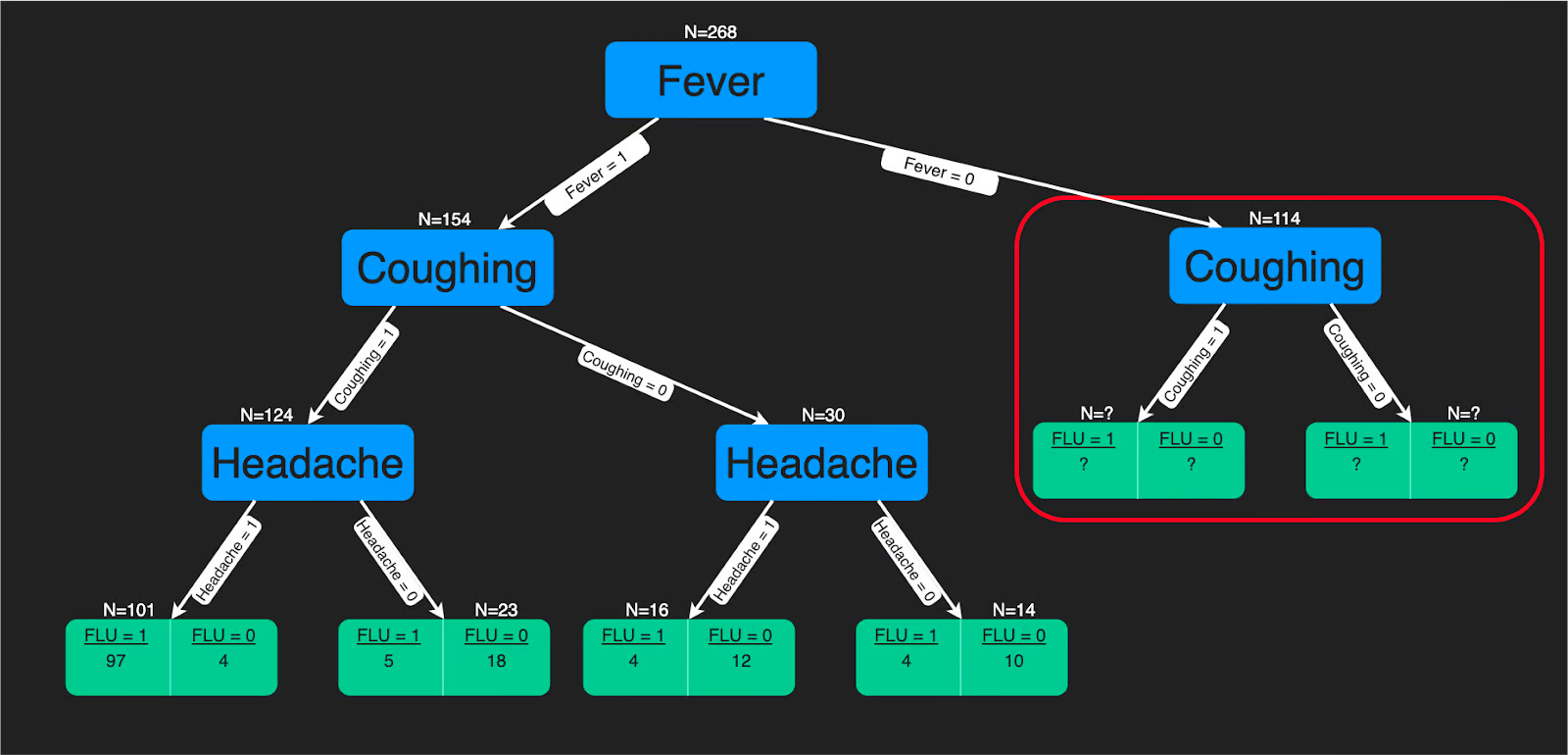

2. Decision Trees: A decision tree is a model that uses a tree-like flow of decision rules based on input features to predict outcomes. It works well for both classification and regression tasks. Its simple, step-by-step logic makes it easy to understand especially useful in fields like healthcare, where clear reasoning behind predictions is important.

ML From Scratch

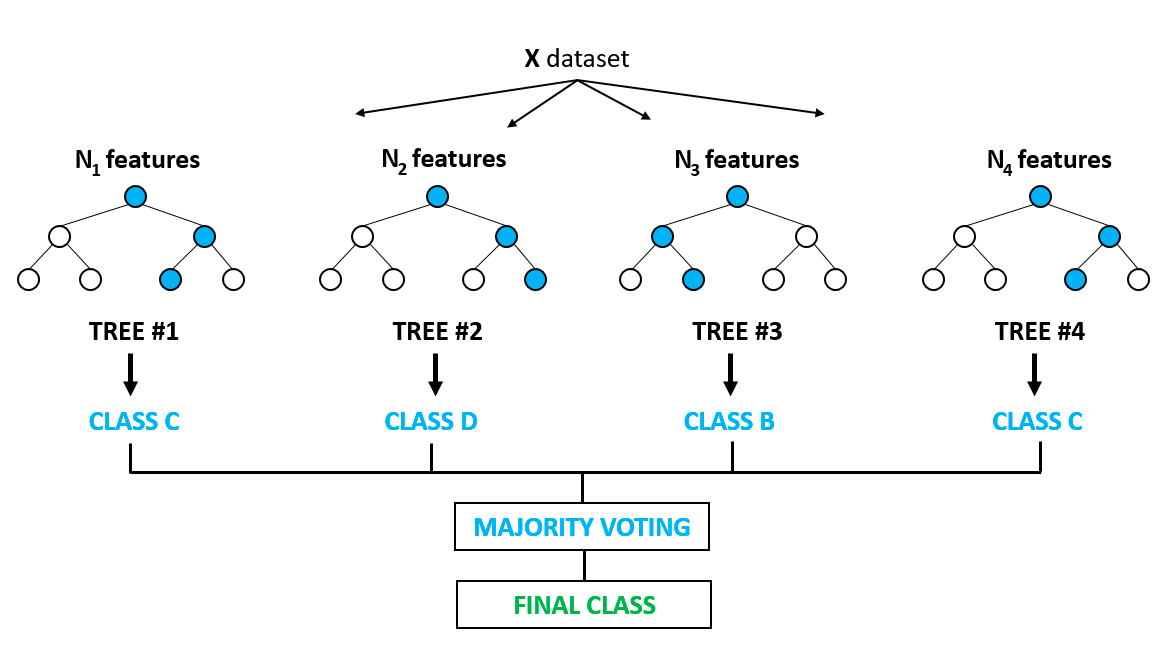

3. Random Forest: Random Forest is a popular algorithm designed to fix the overfitting problem seen in decision trees. Overfitting happens when a model performs well on training data but poorly on new, unseen data.

Random Forest tackles this by creating multiple decision trees using random samples of the data. Each tree gives a prediction, and the final result is based on majority voting, making the model more accurate and reliable.

Miro Medium

Random Forest works for both classification and regression tasks. It’s often used in areas like feature selection and disease detection. You can explore more by diving into tree-based models and ensemble methods, where multiple models are combined for better results.

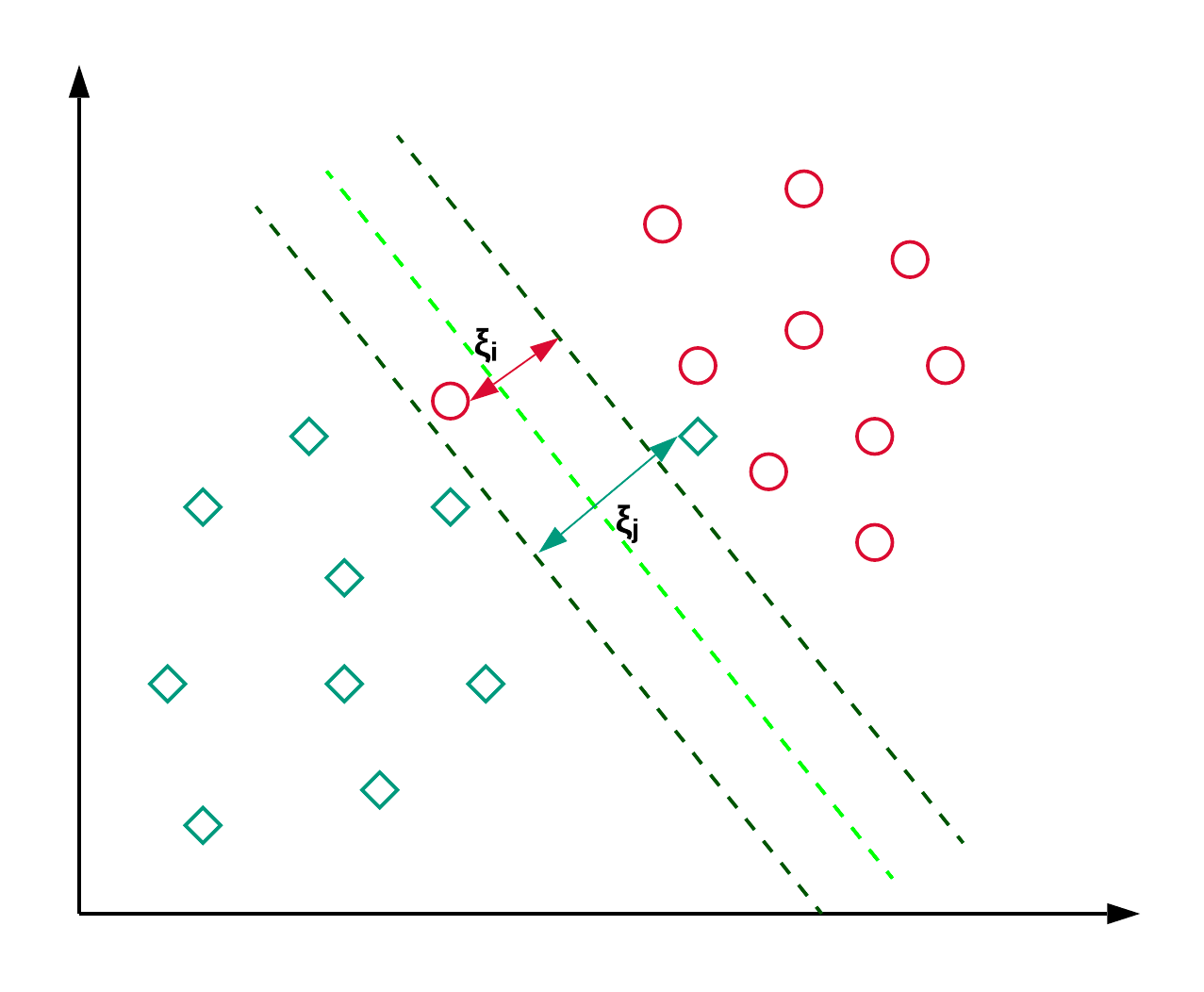

4. Support Vector Machines: Support Vector Machines (SVM) are mainly used for classification tasks. They work by finding the best line or hyperplane—that separates different classes (like red and green dots) while maximizing the distance, or margin, between them for better accuracy.

Micro Medium

While SVM is mainly used for classification, it can also handle regression tasks. Common applications include classifying news articles and recognizing handwriting.

To dive deeper, explore different kernel tricks and check out the Python implementation using scikit-learn. You can also follow a tutorial to replicate the SVM in R for hands-on practice.

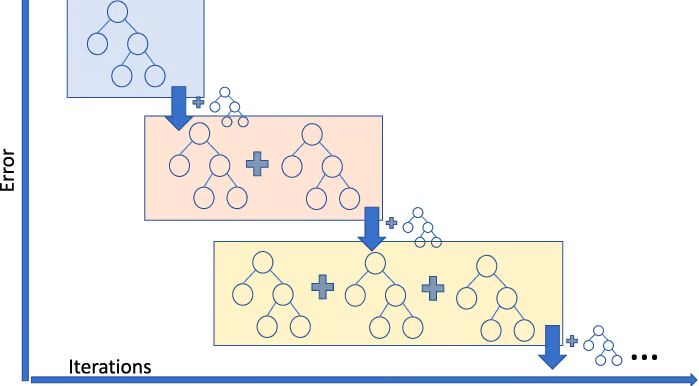

5. Gradient Boosting Regressor: Gradient Boosting Regression is an ensemble method that combines many weak models to create a strong predictor. It handles non-linear data well and deals effectively with multicollinearity.

Research Gate

If you run a ride-sharing service and want to predict fare prices, a gradient boosting regressor can help make accurate predictions. To learn about the different types of gradient boosting methods, you can find many helpful video tutorials online.

Key Takeaways:

- Machine learning helps computers learn patterns from data instead of following fixed rules, making them smarter and more adaptable.

- It’s a core part of AI and powers many everyday technologies, like spam filters, self-driving cars, and voice assistants.

- Popular algorithms include linear regression, decision trees, random forests, support vector machines, and gradient boosting — each suited to different tasks.

- Whether you want to predict prices, classify images, or understand customer behavior, machine learning offers powerful tools that keep improving.

In short, machine learning is the engine behind many AI breakthroughs and a key skill to explore if you want to understand or build smart systems.

Found this useful?

You might enjoy this as well

OpenAI Codex in 2026: From Code Generator to Agentic Development Command Center

From Code Generator to Agentic Development Command Center

February 10, 2026

The AI Architecture Guide(from scratch) for Non-Technical Leaders

How Modern AI Systems Actually Work in Production, and Why Your Success Depends on Everything Except the Model

February 9, 2026

Beyond Size: What the Chatbot Wars Reveal About Real AI Value in 2026

In this blog we will talk about how chatbots have a real value in 2026.

February 6, 2026