Mastering AI Reliability: A 2026 Guide

AI isn’t unreliable because it’s “bad at thinking.”

It’s unreliable because we ask it to think without guardrails.

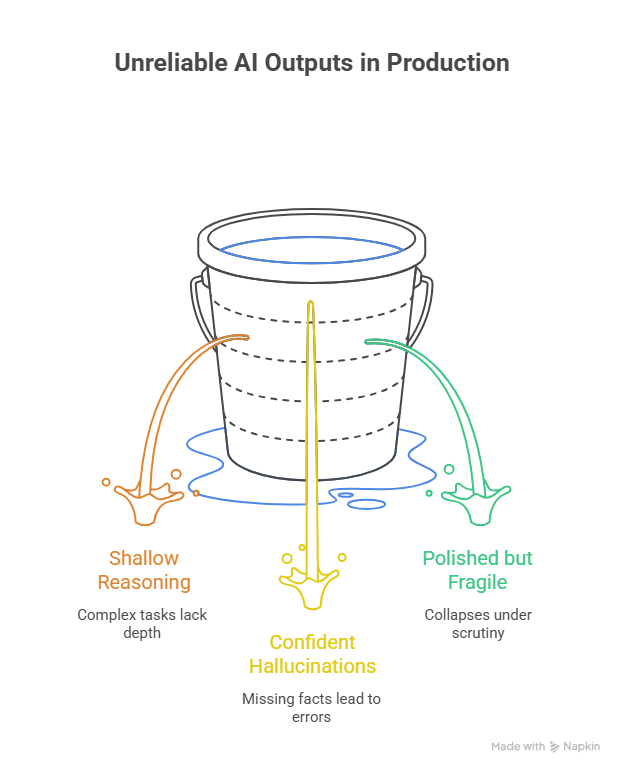

Most failures fall into three buckets:

- Shallow reasoning on complex tasks

- Confident hallucinations when facts are missing

- Outputs that look polished but collapse under scrutiny

This guide is about fixing those failures systematically, not stylistically.

Not by writing “better prompts.”

But by designing reliable reasoning workflows.

The Three Pillars of AI Reliability

Reliable AI systems, whether used by PMs, writers, analysts, or agents, rest on three pillars:

1. Reasoning Chains

Making intermediate thinking explicit where it helps, and constrained where it hurts.

2. Hallucination Debugging & Verification

Treating AI outputs as hypotheses that must survive checks.

3. Self-Critique & Iterative Refinement

Forcing the model to review its own work under structured criteria.

Each pillar solves a different failure mode. Together, they turn AI from a creative guesser into a dependable collaborator.

Pillar 1: Reasoning Chains

Making AI think in steps, without letting it ramble

What Reasoning Chains Actually Are

Reasoning chains (often referred to as “step-by-step reasoning”) are explicit intermediate steps between input and output.

They help when:

- The task is multi-step

- Tradeoffs matter

- The answer depends on assumptions

They hurt when:

- The task is trivial

- The chain becomes longer than the problem

- You outsource judgment instead of guiding it

The goal is clarity, not verbosity.

When Reasoning Chains Work Best

Use them for:

- Product prioritization

- Strategy tradeoffs

- Debugging logic

- Quantitative reasoning

- Complex explanations

Avoid them for:

- Simple rewrites

- Known facts

- Pure ideation

Core Reasoning Chain Techniques

1. Structured Step-Based Reasoning

You define what kind of thinking should happen, not every micro-step.

2. Few-Shot Reasoning

You show one or two worked examples of the reasoning depth you want instead of describing it.

3. Bounded Reasoning

You cap the number of steps to avoid overthinking.
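Bounded reasoning is easier to hold the model to if you check the cap rather than only request it. A minimal Python sketch, assuming the model numbers its steps as `1.`, `2.`, and so on at the start of each line; `build_bounded_prompt`, `count_steps`, and `within_bound` are hypothetical helpers, not part of any library:

```python
import re

# Matches lines that start with a step number such as "1." or "2)" (assumed convention).
STEP_PATTERN = re.compile(r"^\s*\d+[.)]\s+", re.MULTILINE)


def build_bounded_prompt(task: str, max_steps: int) -> str:
    """Wrap a task in a prompt that caps the number of reasoning steps."""
    return (
        f"{task}\n\n"
        f"Reason in at most {max_steps} numbered steps (1., 2., ...), "
        "then give the final answer on a line starting with 'Answer:'."
    )


def count_steps(response: str) -> int:
    """Count numbered reasoning steps in a model response."""
    return len(STEP_PATTERN.findall(response))


def within_bound(response: str, max_steps: int) -> bool:
    """True if the response used no more steps than allowed."""
    return count_steps(response) <= max_steps


reply = (
    "1. Assume a 12-month horizon.\n"
    "2. Use impact vs effort.\n"
    "3. A ranks first: high impact, low effort.\n"
    "Answer: A, B, C"
)
print(count_steps(reply))      # 3
print(within_bound(reply, 2))  # False
```

A response that exceeds the cap can be retried or flagged instead of accepted as-is.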

Example 1: Product Prioritization (PM Use Case)

Weak Prompt

Prioritize these features: A, B, C.

Why it fails

- No criteria

- No constraints

- No accountability

Context-Engineered Reasoning Prompt

Prioritize the following features for a B2B SaaS roadmap.

Features:

A: User authentication improvements (high user impact, low effort)

B: Advanced analytics dashboard (high impact, high effort)

C: In-app chat support (medium impact, medium effort)

Reasoning structure:

1. State assumptions.

2. Choose a prioritization lens (e.g., impact vs effort).

3. Compare tradeoffs explicitly.

4. Rank features.

5. List risks or uncertainties.

Keep reasoning concise. Final output should be decision-ready.

Why this works

- Reasoning is guided, not micromanaged

- Tradeoffs are explicit

- Output can survive stakeholder questions
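If the feature list lives in a tracker or spreadsheet, the same prompt can be assembled from structured data so the reasoning structure stays identical across runs. A minimal sketch; the `Feature` dataclass and `build_prioritization_prompt` are illustrative names, and the impact/effort labels are copied from the example above:

```python
from dataclasses import dataclass


@dataclass
class Feature:
    key: str
    name: str
    impact: str
    effort: str


# The reasoning structure from the prompt above, kept fixed across runs.
REASONING_STEPS = [
    "State assumptions.",
    "Choose a prioritization lens (e.g., impact vs effort).",
    "Compare tradeoffs explicitly.",
    "Rank features.",
    "List risks or uncertainties.",
]


def build_prioritization_prompt(context: str, features: list[Feature]) -> str:
    """Assemble the context-engineered prioritization prompt from structured data."""
    lines = [f"Prioritize the following features for a {context}.", "", "Features:"]
    for feature in features:
        lines.append(
            f"{feature.key}: {feature.name} ({feature.impact} impact, {feature.effort} effort)"
        )
    lines += ["", "Reasoning structure:"]
    lines += [f"{i}. {step}" for i, step in enumerate(REASONING_STEPS, start=1)]
    lines += ["", "Keep reasoning concise. Final output should be decision-ready."]
    return "\n".join(lines)


features = [
    Feature("A", "User authentication improvements", "high", "low"),
    Feature("B", "Advanced analytics dashboard", "high", "high"),
    Feature("C", "In-app chat support", "medium", "medium"),
]
print(build_prioritization_prompt("B2B SaaS roadmap", features))
```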

Example 2: Logical Reasoning (Classic Sanity Check)

Prompt

When I was 6, my sister was half my age. Now I’m 70. How old is she?

Explain step by step.

Reasoning

- At 6, sister = 3 (difference = 3 years)

- Age difference stays constant

- 70 − 3 = 67

Why this matters

This isn’t about the answer.

It’s about testing whether the model preserves invariants, a key reliability signal.
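A sanity check only pays off if you compare the model's answer with a deterministic ground truth. A minimal sketch with hypothetical helper names: it encodes the invariant (the age gap never changes) and flags the common wrong answer of halving the current age:

```python
def sister_age_now(my_age_then: int, my_age_now: int) -> int:
    """Sister was half my age back then; the age gap stays constant afterwards."""
    if my_age_then % 2:
        raise ValueError("this puzzle assumes an even starting age")
    sister_then = my_age_then // 2   # 6 -> 3
    gap = my_age_then - sister_then  # invariant: 3 years
    return my_age_now - gap


def preserves_invariant(model_answer: int, my_age_then: int, my_age_now: int) -> bool:
    """False for answers that break the constant-gap invariant, such as 70 / 2 = 35."""
    return model_answer == sister_age_now(my_age_then, my_age_now)


print(sister_age_now(6, 70))           # 67
print(preserves_invariant(35, 6, 70))  # False
```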

Common Reasoning Pitfalls

- Chains longer than the problem

- Repeating obvious steps

- Optimizing for “sounding smart”

- Treating reasoning text as truth instead of process

Rule of thumb:

If the reasoning is longer than your own thinking, it’s probably wasteful.

Pillar 2: Debugging Hallucinations

Turning confident guesses into verified answers

What Hallucinations Actually Are

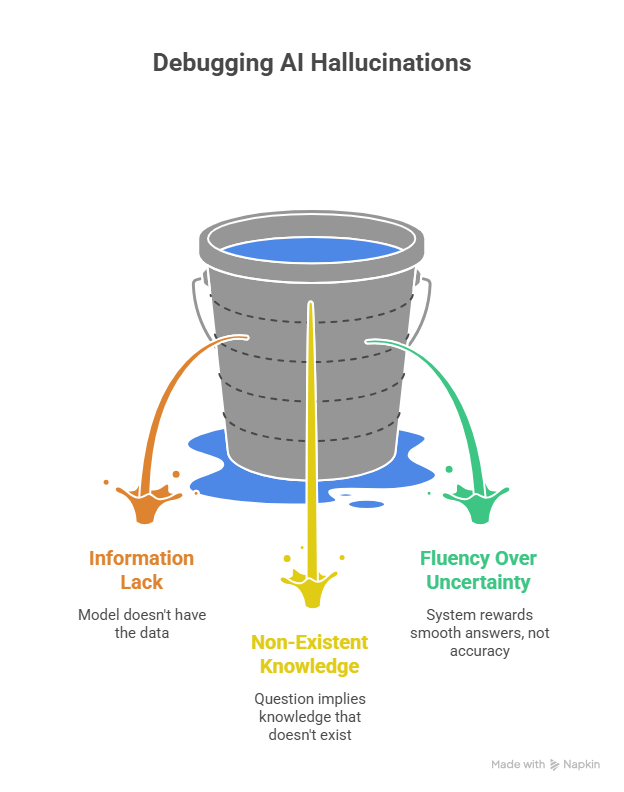

Hallucinations are not “random lies.”

They occur when:

- The model lacks information

- The question implies knowledge that doesn’t exist

- The system rewards fluency over uncertainty

The fix is verification design, not tone changes.

Core Anti-Hallucination Techniques

1. Chain-of-Verification (CoVe)

Force the model to:

- Generate claims

- Question each claim

- Confirm or reject

2. Grounding Constraints

Explicitly restrict what sources or context may be used.

3. Permission to Say “Unknown”

Make “unknown” an acceptable answer. Models are more likely to guess when admitting uncertainty is penalized.
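The three techniques combine naturally into one pipeline. Below is a simplified sketch of the claim-level loop described above (generate claims, question each one, confirm or reject), with grounding and an explicit “unknown” option built into the prompts. `ask` is a hypothetical stand-in for whatever LLM client you use, and the prompt wording is an assumption, not a canonical Chain-of-Verification implementation:

```python
from typing import Callable

# Hypothetical placeholder for any LLM call: prompt in, text out.
Ask = Callable[[str], str]


def chain_of_verification(question: str, ask: Ask) -> str:
    # 1. Draft a baseline answer, with explicit permission to say "Unknown".
    draft = ask(f"Answer concisely. If you do not know, say 'Unknown'.\n\n{question}")

    # 2. Break the draft into individual claims, one per line.
    claims_text = ask(f"List each factual claim in this answer, one per line:\n\n{draft}")
    claims = [line.strip("- ").strip() for line in claims_text.splitlines() if line.strip()]

    # 3. Question each claim in a separate call, without showing the draft,
    #    so the check is not anchored on the original wording.
    verdicts = []
    for claim in claims:
        verdict = ask(
            "Is the following claim supported by confirmed information? "
            f"Reply 'SUPPORTED', 'REJECTED', or 'UNKNOWN'.\n\nClaim: {claim}"
        )
        verdicts.append(f"{claim} -> {verdict.strip()}")

    # 4. Rewrite the answer from supported claims only (grounding constraint).
    final_prompt = (
        f"Question: {question}\n\n"
        "Verified claims:\n" + "\n".join(verdicts) + "\n\n"
        "Write a final answer using only SUPPORTED claims. "
        "If none are supported, say 'Unknown'."
    )
    return ask(final_prompt)
```

Checking each claim in its own call, without the draft in view, is the design choice that matters: it keeps the verifier from simply agreeing with the first answer.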

Hallucination Example (Realistic)

Bad Prompt

When will GPT-5 be released?

Why this fails

- The question presumes a release date exists, when there may be no confirmed public answer

- Model is pressured to guess

Verification-First Prompt

Answer the following only using confirmed public information.

Process:

1. List what is officially known.

2. Identify what is unknown.

3. If no confirmed date exists, say so clearly.

4. Do not speculate.

Question: Is there an official release date for GPT-5?

Safe Output (when no date has been announced)

There is no officially confirmed release date. Public statements indicate ongoing development, but no timeline has been announced.

Why this works

- Removes incentive to fabricate

- Separates signal from noise

Precaution Prompt Sheet (Hallucination Control)

You can reuse these across workflows:

- “Base the answer only on provided context.”

- “If information is missing, say ‘Insufficient data.’”

- “List assumptions before conclusions.”

- “Separate facts from interpretations.”

- “Flag any uncertainty explicitly.”

- “Do not infer timelines without sources.”

- “Break the answer into verifiable claims.”

- “Reject the task if accuracy cannot be ensured.”

- “Cross-check internally for contradictions.”

- “If confidence < high, explain why.”
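Keeping the sheet as data lets every workflow reuse the same wording, and lets code act on the “Insufficient data.” response instead of passing it on as an answer. A minimal sketch; the clause selection, prompt layout, and helper names are assumptions:

```python
from collections.abc import Sequence

# A subset of the sheet above; which clauses to include is a per-workflow choice.
GUARDRAILS = (
    "Base the answer only on provided context.",
    "If information is missing, say 'Insufficient data.'",
    "Separate facts from interpretations.",
    "Flag any uncertainty explicitly.",
)

SENTINEL = "Insufficient data."


def build_grounded_prompt(context: str, question: str, rules: Sequence[str] = GUARDRAILS) -> str:
    """Prefix the question with guardrail rules and the only context the model may use."""
    rule_text = "\n".join(f"- {rule}" for rule in rules)
    return f"Rules:\n{rule_text}\n\nContext:\n{context}\n\nQuestion: {question}"


def is_insufficient(answer: str) -> bool:
    """Detect the sentinel so the answer can be routed to a human or a retrieval step."""
    return answer.strip().lower().startswith(SENTINEL.lower().rstrip("."))
```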

Pillar 3: Self-Critique & Iterative Refinement

Making AI review its own work like a senior peer

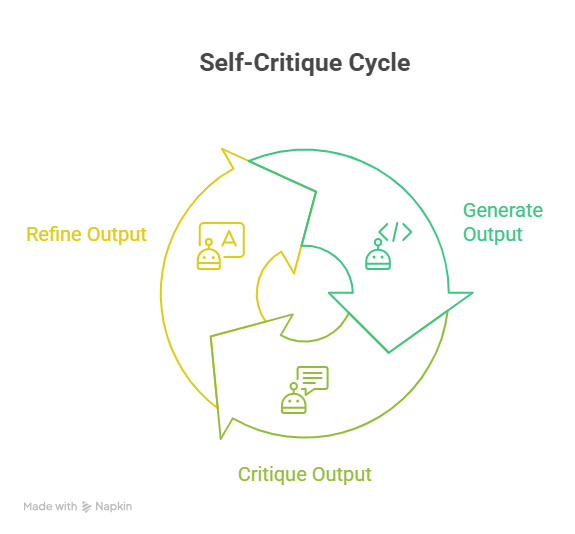

Self-critique works not because AI is “self-aware,” but because critique is a different cognitive task than generation.

The 3-Phase Self-Critique Loop

- Generate – Initial output

- Critique – Evaluate against explicit criteria

- Refine – Fix concrete issues

Limit to 2–3 iterations to avoid drift.
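The loop and its iteration cap can be sketched as plain control flow. This assumes three hypothetical callables (`generate`, `critique`, `refine`) backed by model calls, and a critique step that returns a 1–10 score per criterion; the threshold of 8 is an arbitrary example value:

```python
from typing import Callable

# Hypothetical stand-ins for three LLM calls.
Generate = Callable[[str], str]
Critique = Callable[[str], dict[str, int]]     # criterion -> score (1-10)
Refine = Callable[[str, dict[str, int]], str]  # draft + failing criteria -> new draft


def self_critique_loop(
    task: str,
    generate: Generate,
    critique: Critique,
    refine: Refine,
    threshold: int = 8,
    max_iterations: int = 3,
) -> tuple[str, int]:
    """Return the final draft and the number of refinements applied."""
    draft = generate(task)
    for iteration in range(max_iterations):
        scores = critique(draft)
        if all(score >= threshold for score in scores.values()):
            return draft, iteration  # every criterion clears the bar; stop early
        weak = {name: score for name, score in scores.items() if score < threshold}
        draft = refine(draft, weak)  # fix only the criteria that failed
    return draft, max_iterations     # cap reached; stop to avoid drift
```

Passing only the failing criteria to `refine` mirrors the “fix concrete issues” rule: the revision is told what to fix, not invited to rewrite everything.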

Example: Blog Draft Refinement

Phase 1 – Generate

Write a 300-word intro on AI product strategy.

Phase 2 – Critique Prompt

Critique the above draft on:

1. Clarity of argument

2. Unsupported claims

3. Audience relevance

4. Structure and flow

Score each from 1–10.

List specific fixes.

Phase 3 – Refine

Revise the draft addressing only the issues identified above.

Why this works

- Critique is scoped

- Revision is targeted

- Output improves without bloating
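Because the critique prompt asks for numeric scores, its output can be parsed and used to decide whether another revision is worth running. A minimal sketch, assuming the model writes one criterion per line in a form like `2. Unsupported claims: 6/10`; real outputs vary, so treat the pattern as a starting point:

```python
import re

# One criterion per line, optional "N." prefix, score with optional "/10" suffix (assumed format).
SCORE_LINE = re.compile(
    r"^\s*(?:\d+\.\s*)?(?P<criterion>[^:\n]+?)\s*:\s*(?P<score>\d{1,2})(?:\s*/\s*10)?\b",
    re.MULTILINE,
)


def parse_scores(critique: str) -> dict[str, int]:
    """Extract {criterion: score} pairs from a critique, ignoring free-text fix lines."""
    scores = {}
    for match in SCORE_LINE.finditer(critique):
        score = int(match.group("score"))
        if 1 <= score <= 10:  # drop anything outside the requested 1-10 scale
            scores[match.group("criterion").strip()] = score
    return scores


critique = (
    "1. Clarity of argument: 7/10\n"
    "2. Unsupported claims: 4/10\n"
    "Fix: cite a source for the 40% figure.\n"
)
print(parse_scores(critique))
```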

Common Self-Critique Mistakes

- Vague critique (“make it better”)

- Unlimited loops

- Letting critique introduce new goals

Critique should tighten, not expand.

50 High-Impact Prompt Templates (Production-Ready)

A. Reasoning Chains (15)

- “Solve [problem] by stating assumptions → evaluating options → choosing tradeoffs → final decision.”

- “Analyze [decision] with constraints: time, cost, risk.”

- “Break this problem into no more than 5 reasoning steps.”

- “Compare options by impact vs reversibility.”

- “Explain reasoning as if defending to a skeptical stakeholder.”

- “List what must be true for this to work.”

- “Identify second-order effects.”

- “Highlight where judgment is required.”

- “Separate facts from opinions.”

- “Optimize for decision clarity, not completeness.”

- “Explain why this might fail.”

- “Rank options and justify elimination.”

- “Surface hidden assumptions.”

- “State what would change your recommendation.”

- “Summarize reasoning in 3 bullets.”

B. Hallucination Shields (15)

- “Use only the following sources/context.”

- “If unsure, respond with ‘unknown.’”

- “Do not infer missing facts.”

- “List claims and validate each.”

- “Reject the task if accuracy can’t be guaranteed.”

- “Flag speculative language.”

- “Distinguish known vs assumed.”

- “Time-bound all statements.”

- “Avoid future predictions.”

- “State confidence level.”

- “Explain evidence used.”

- “Cross-check internally.”

- “Highlight ambiguity.”

- “Ask for clarification before answering.”

- “Stop if data is insufficient.”

C. Self-Critique Loops (20)

- “Score output for clarity, accuracy, usefulness.”

- “Identify weakest paragraph.”

- “List 3 concrete improvements.”

- “Rewrite only unclear sections.”

- “Remove unsupported claims.”

- “Check for audience mismatch.”

- “Simplify without losing meaning.”

- “Flag bias or overconfidence.”

- “Improve structure only.”

- “Condense without deleting meaning.”

- “Evaluate decision quality.”

- “Check alignment with goal.”

- “Replace vague language.”

- “Ensure internal consistency.”

- “Test for misinterpretation.”

- “Improve scannability.”

- “Remove filler.”

- “Strengthen conclusion.”

- “Assess real-world usability.”

- “Final pass: would this survive scrutiny?”
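Templates with placeholders such as `[problem]` and `[decision]` are easy to send half-filled. A minimal sketch of a filler that refuses to do so; `fill_template` is a hypothetical helper, and the square-bracket syntax is taken from the templates above:

```python
import re

# Matches [placeholder] names in the templates above.
PLACEHOLDER = re.compile(r"\[([^\]]+)\]")


def fill_template(template: str, **values: str) -> str:
    """Replace [name] placeholders; fail loudly instead of sending a half-filled prompt."""
    missing = [name for name in PLACEHOLDER.findall(template) if name not in values]
    if missing:
        raise KeyError(f"missing values for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


template = "Analyze [decision] with constraints: time, cost, risk."
print(fill_template(template, decision="the pricing change"))
# Analyze the pricing change with constraints: time, cost, risk.
```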

The Reliability Workflow (Put This on a Wall)

- Reason deliberately

- Verify aggressively

- Critique narrowly

- Refine once or twice

This is how teams move from:

“AI is impressive but unreliable”

to

“AI is dependable under constraints.”

Final Thought

Reliable AI isn’t about smarter models.

It’s about better thinking, made explicit.

When you:

- Design reasoning

- Demand verification

- Enforce critique

AI stops guessing and starts collaborating.
