Context Engineering Is the Real Skill in 2026

Stop Prompting Like It’s 2022

For the past few years, people have obsessed over prompts.

The perfect phrasing.

The magic formula.

The belief that one clever paragraph could unlock super-intelligence.

That era is over.

Modern AI models don’t fail because your prompt isn’t clever enough.

They fail because you didn’t give them context.

The real skill is no longer prompt engineering.

It’s context engineering.

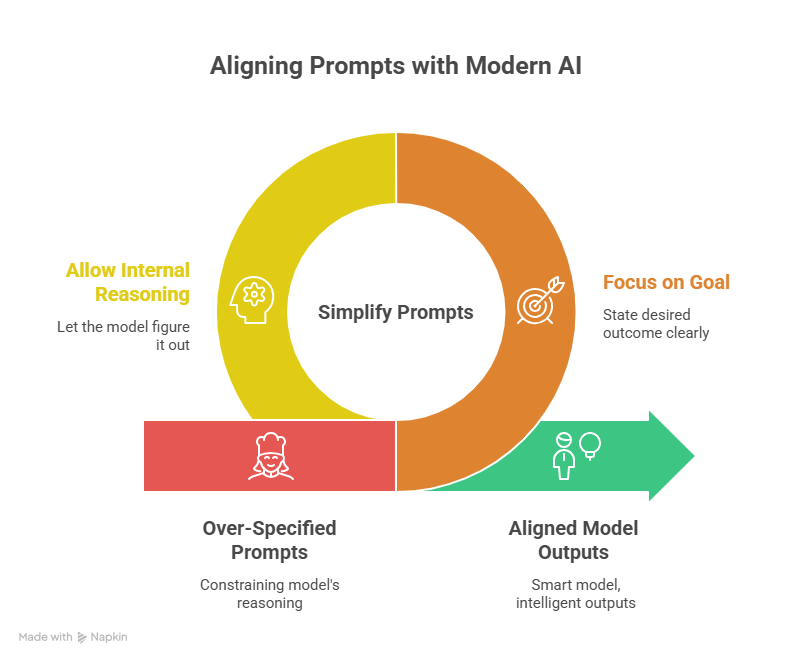

Once models became capable of internal reasoning, step-by-step micromanagement stopped helping and often started to get in the way. The bottleneck shifted from how you ask to what you’re actually trying to achieve.

This guide explains:

- Why prompts stopped working the way people expect

- How modern models actually interpret instructions

- A practical framework for context engineering

- 50 high-leverage, reusable examples you can apply immediately

This is not a prompt collection.

It’s a way of thinking.

Who This Guide Is For (and Not For)

This guide is for:

- Operators, founders, PMs, writers, strategists

- People using modern frontier models

- Anyone delegating real thinking to AI, not just generating text

This guide is not for:

- Prompt collectors

- One-shot “do this for me” usage

- People looking for tricks instead of clarity

If you want AI to behave like a collaborator instead of a slot machine, keep reading.

Why “Good Prompts” Stopped Working

Early models needed hand-holding.

You had to spell everything out:

- Analyze this

- Then summarize that

- Then rewrite in this tone

So people learned to write prompts like recipes.

That made sense in 2022.

But modern models don’t behave like junior interns anymore.

They reason internally.

When you over-specify the process, you:

- Constrain the model’s reasoning

- Force it to follow your thinking, even when it could do better

- Get rigid, shallow outputs from very capable systems

This is why people often say:

“The model is smart, but the output feels dumb.”

The problem isn’t intelligence.

It’s misalignment.

Goal Over Process

Context engineering starts with a single shift:

Define the goal, not the process.

Instead of telling the model how to think, tell it what success looks like.

Modern models are good at inferring a path toward a stated outcome.

When you define the end state clearly, they can usually work backward to the steps on their own.

This mirrors how humans work:

- We define an outcome

- Infer steps dynamically

- Adjust along the way

AI can do the same if you let it.

Example

Weak instruction

“First analyze the data, then identify patterns, then write conclusions.”

Strong context

“I need an output that helps non-technical stakeholders understand our AI roadmap clearly, without overwhelming them.”

The second version communicates:

- Audience

- Outcome

- Success condition

- Implicit constraints

No micromanagement required.

Prompting vs Context Engineering

Think of it this way:

Prompting

Words → Output

Context Engineering

Intent → Reasoning → Output

Prompting focuses on phrasing.

Context engineering focuses on alignment.

That’s why context scales and prompts don’t.

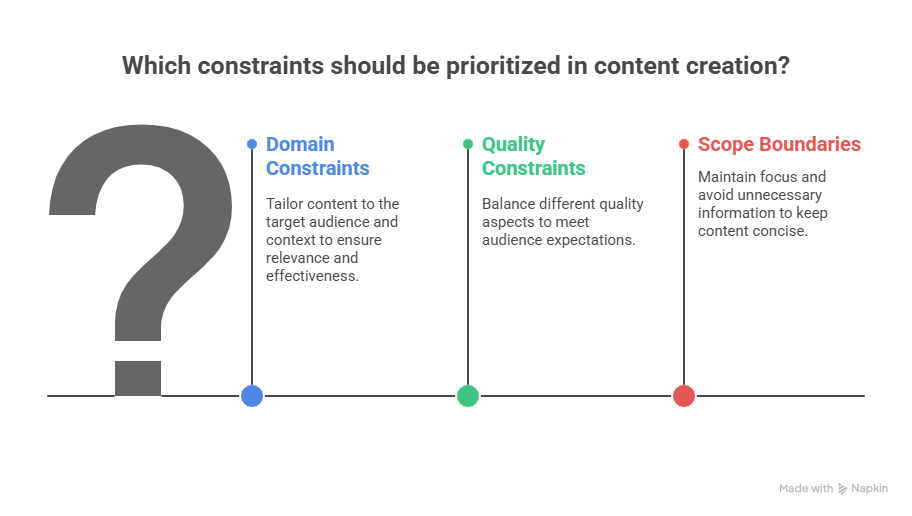

Constraints > Rules

Rules tell the model what to do.

Constraints define the boundaries within which it can reason.

Modern models respond far better to constraints.

What they need to know:

- What they’re optimizing for

- What matters most

- What would make the output actively bad

Three Constraints That Matter Most

1. Domain constraints

Who is this for?

- Executives vs engineers

- Beginners vs experts

- Regulated vs casual contexts

2. Quality constraints

What matters more?

- Brevity vs depth

- Practical vs theoretical

- Formal vs conversational

3. Scope boundaries

What should not be included?

- Avoid jargon

- Skip introductions

- No competitor comparisons

Don’t List Rules. Embed Constraints.

Instead of saying:

“Follow these rules: keep it simple, no jargon, be concise.”

Say:

“This should work for someone new to the topic, so prioritize clarity and practical examples over technical detail.”

This mirrors how people naturally explain intent, which is likely why models respond better to it.

Examples Teach Better Than Instructions

LLMs infer intent more effectively from examples than from abstractions.

If you care about:

- Format → show one

- Tone → quote a sentence

- Depth → contrast bad vs good

Instead of saying:

“Write at the right depth”

Say:

“Here’s what good depth looks like. Here’s what’s too shallow.”

This alone can noticeably reduce the number of iteration cycles.
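If you assemble prompts in code, the same idea can be made explicit by attaching labeled good and too-shallow examples to the instruction. A minimal Python sketch, assuming plain-string prompts; `with_examples` is a hypothetical helper, and the instruction and bracketed example texts are placeholders you would replace with your own:

```python
def with_examples(instruction: str, good: list[str], too_shallow: list[str]) -> str:
    """Hypothetical helper: append labeled reference examples to an instruction."""
    sections = [instruction]
    # Show what the target looks like first...
    for i, text in enumerate(good, start=1):
        sections.append(f"Here's what good depth looks like (example {i}):\n{text}")
    # ...then show what falls short, so the contrast carries the standard.
    for i, text in enumerate(too_shallow, start=1):
        sections.append(f"Here's what's too shallow (example {i}):\n{text}")
    return "\n\n".join(sections)


prompt = with_examples(
    "Explain our churn numbers to the sales team.",
    good=["[a paragraph at the depth you want]"],
    too_shallow=["[a one-line answer you would reject]"],
)
print(prompt)
```

Labeling each example with what it is meant to show keeps the model from copying the weak one.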

Define Performance, Not Vibes

Vague instructions kill output quality.

“Be concise.”

“Explain clearly.”

“Make it accurate.”

On their own, these give a model nothing concrete to aim at.

Define observable success instead.

- “Be concise.” → “Each section should fit in a single paragraph so this works as a quick reference.”

- “Explain clearly.” → “Someone should understand this without looking up definitions.”

- “Make it accurate.” → “Facts should be verifiable within two minutes of searching.”

You’re not asking the model to guess your standards.

You’re giving it a target.

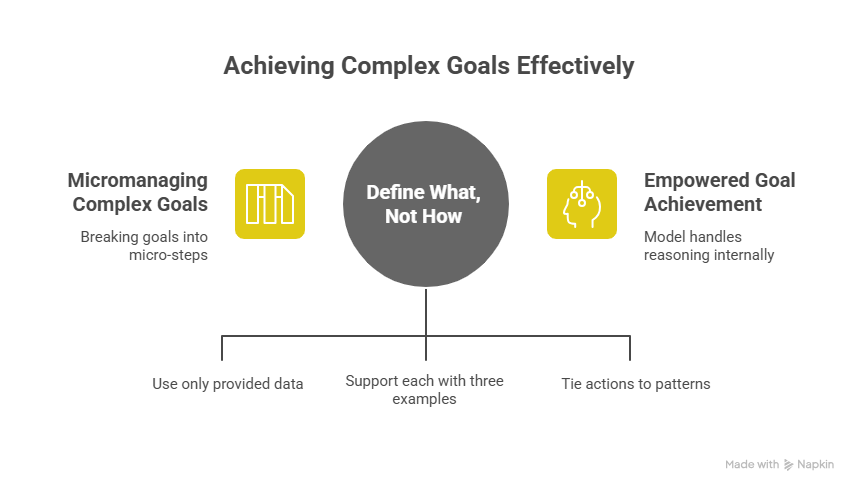

Handling Complex Goals Without Micromanagement

When goals are complex, don’t break them into micro-steps.

Define what must happen, not how.

Example:

“I need an analysis where:

- First, you assess the current state using only the provided data

- Then, identify patterns, each supported by at least three examples

- Finally, recommend actions tied directly to those patterns”

Clear stages.

Clear constraints.

No instructions on how to carry out each stage.

The model handles the reasoning internally.

The Universal Context Engineering Template

Use this structure everywhere:

Primary Goal

I need [output type] that accomplishes [X].

Context

This is for [audience/domain], where [what matters most].

Constraints

Focus on [priority]. Avoid [anti-priority].

Performance Criteria

Success means [observable outcome].

This would fail if [failure condition].

Outcome

After reading, the user should be able to [do X].

This scales from emails to strategy docs to product specs.
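If you reuse the template across many tasks, filling it programmatically makes it harder to forget a section. A minimal Python sketch, assuming you assemble prompts in code before sending them to whichever model you use; `ContextBrief` and `render` are hypothetical names for illustration, not part of any library. The example values are adapted from the decision-memo prompt below, and the failure condition is a placeholder:

```python
from dataclasses import dataclass


@dataclass
class ContextBrief:
    """Hypothetical container for the five sections of the template."""
    output_type: str
    accomplishes: str
    audience: str
    what_matters: str
    priority: str
    anti_priority: str
    success: str
    failure: str
    user_can: str

    def render(self) -> str:
        # Refuse to render if any field was left blank, so no part of
        # the template is silently skipped.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"missing template field: {name}")
        # Fill the template sections in the same order as the guide.
        return "\n\n".join([
            f"Primary Goal\nI need {self.output_type} that accomplishes {self.accomplishes}.",
            f"Context\nThis is for {self.audience}, where {self.what_matters}.",
            f"Constraints\nFocus on {self.priority}. Avoid {self.anti_priority}.",
            "Performance Criteria\n"
            f"Success means {self.success}.\n"
            f"This would fail if {self.failure}.",
            f"Outcome\nAfter reading, the user should be able to {self.user_can}.",
        ])


# Example values adapted from prompt 1 below; the failure condition is a placeholder.
brief = ContextBrief(
    output_type="a 2-page decision memo",
    accomplishes="a clear go / no-go on adopting [AI tool] for [workflow]",
    audience="non-technical executives",
    what_matters="risk, cost, and operational impact matter most",
    priority="business trade-offs, timelines, and change management",
    anti_priority="technical architecture details",
    success="an exec can restate the recommendation and top 3 risks from memory",
    failure="[an illustrative failure condition you define]",
    user_can="make the adoption decision",
)
print(brief.render())
```

Raising on an empty field is a deliberate choice: a brief with a missing success or failure condition is exactly the kind of vague instruction the template exists to prevent.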

50 High-Leverage Context-Engineered Prompts

These are not “magic prompts.”

They are aligned instructions.

Copy them. Replace the brackets. Tighten the constraints.

A. Strategy & Decision-Making (1–10)

1. Strategic decision memo

I need a 2-page decision memo on whether we should adopt [AI tool] for [workflow].

This is for non-technical executives who care about risk, cost, and operational impact.

Focus on business trade-offs, timelines, and change management.

Avoid technical architecture details.

Success means an exec can restate the recommendation and top 3 risks from memory.

2. Build vs buy analysis

I need a comparison of building vs buying [capability].

Audience: product and engineering leadership.

Focus on long-term maintenance, opportunity cost, and speed.

Avoid feature checklists.

Outcome: a decision in one meeting.

3. Market entry assessment

I need an assessment of entering [market] in the next 12 months.

Audience: founders and operators.

Focus on demand signals, risks, and execution complexity.

Avoid market-sizing fluff.

Outcome: clear go / no-go.

4. Prioritization framework

I need a framework to decide what to build next.

Audience: PMs under delivery pressure.

Focus on clarity, not theory.

Avoid academic models.

Outcome: confident ranking.

5. Risk assessment

I need a risk analysis for launching [feature].

Audience: leadership.

Focus on operational, reputational, and adoption risks.

Avoid edge cases.

Outcome: mitigation priorities.

6. OKR critique

I need a critique of our current OKRs.

Audience: leadership.

Focus on clarity and measurability.

Avoid motivational language.

Outcome: actionable OKRs.

7. Go-to-market plan

I need a simple GTM plan for [product].

Audience: small execution team.

Focus on sequencing and ownership.

Avoid buzzwords.

Outcome: clarity on the next 30 days.

8. Competitive positioning

I need positioning guidance against [competitor].

Audience: sales and marketing.

Focus on differentiation customers care about.

Avoid feature matrices.

Outcome: one positioning statement.

9. Pricing decision support

I need help deciding pricing for [product].

Audience: founders.

Focus on trade-offs and perception.

Avoid pricing theory.

Outcome: defendable decision.

10. Board update synthesis

I need a concise board update from these notes.

Audience: board members.

Focus on progress, risks, and asks.

Avoid operational detail.

Outcome: shared understanding.

B. Writing & Communication (11–20)

11. LinkedIn post (1 story, 1 lesson)

I need a LinkedIn post telling one story about [experience] with one clear lesson.

Audience: non-technical professionals.

Avoid clichés and emojis.

Outcome: screenshot-worthy clarity.

12. Founder announcement

I need a founder announcement for [milestone].

Audience: customers and partners.

Focus on why it matters to them.

Avoid self-congratulation.

Outcome: confidence.

13. Internal announcement

I need an internal announcement explaining [change].

Audience: cross-functional team.

Focus on clarity and reassurance.

Avoid leadership jargon.

Outcome: fewer follow-ups.

14. Cold email rewrite

Rewrite this cold email for [ICP].

Focus on relevance and specificity.

Avoid hype and urgency tricks.

Outcome: readable in 10 seconds on mobile.

15. Product update email

I need a product update email for [feature].

Audience: existing users.

Focus on value, not functionality.

Avoid long explanations.

Outcome: understanding.

16. Blog introduction

I need an intro for a blog on [topic].

Audience: busy operators.

Focus on framing the problem fast.

Avoid history lessons.

Outcome: continued reading.

17. Executive summary

I need an executive summary of this document.

Audience: executives with 5 minutes.

Focus on decisions and implications.

Avoid detail.

Outcome: clarity without reading the full doc.

18. Customer apology

I need an apology message for [issue].

Audience: affected customers.

Focus on accountability and next steps.

Avoid defensiveness.

Outcome: restored trust.

19. Thought leadership article

I need a thought leadership piece on [topic].

Audience: senior professionals.

Focus on insight, not education.

Avoid beginner explanations.

Outcome: credibility.

20. Webinar description

I need a description for a webinar on [topic].

Audience: professionals considering attending.

Focus on takeaways.

Avoid vague promises.

Outcome: registration intent.

C. Product & UX (21–30)

21. Lean product spec

I need a 1-page spec for [feature].

Audience: designers and engineers.

Focus on problem, goal, and risks.

Avoid over-engineering.

Outcome: estimable in one meeting.

22. UX critique

I need a UX critique of this flow.

Audience: product team.

Focus on friction and clarity.

Avoid aesthetic preferences.

Outcome: actionable changes.

23. User story refinement

Refine these user stories.

Audience: engineers.

Focus on clarity and acceptance criteria.

Avoid edge cases.

Outcome: fewer questions.

24. Feature trade-offs

Help articulate trade-offs for [feature].

Audience: stakeholders.

Focus on what we’re not doing.

Avoid justification language.

Outcome: alignment.

25. Metrics definition

Define success metrics for [feature].

Audience: product leadership.

Focus on leading indicators.

Avoid vanity metrics.

Outcome: measurable success.

26. Experiment design

Design an experiment to test [hypothesis].

Audience: PMs.

Focus on speed and learning.

Avoid over-precision.

Outcome: clear decision criteria.

27. Roadmap narrative

Create a roadmap narrative from these initiatives.

Audience: leadership.

Focus on themes, not dates.

Avoid over-commitment.

Outcome: shared understanding.

28. Customer journey map

Create a simple journey map for [user].

Audience: cross-functional team.

Focus on friction points.

Avoid exhaustive detail.

Outcome: prioritization clarity.

29. Feature deprecation plan

Create a plan to deprecate [feature].

Audience: customers and internal teams.

Focus on communication and transition.

Avoid technical detail.

Outcome: smooth migration.

30. PRD simplification

Simplify this PRD.

Audience: busy engineers.

Focus on build-relevant decisions.

Avoid long background sections.

Outcome: faster execution.

D. Learning, Research & Personal Systems (31–50)

31. 30-day learning plan

I need a 30-day plan to learn [skill].

Audience: time-poor professional.

Focus on application.

Outcome: usable competence.

32. Research synthesis

Synthesize these notes into insights.

Audience: decision-makers.

Focus on patterns and implications.

Avoid summaries.

Outcome: confident decisions.

33. Interview synthesis

Synthesize these interviews.

Audience: product team.

Focus on themes and quotes.

Avoid speculation.

Outcome: prioritization input.

34. Weekly operating system

Create a weekly system using AI.

Audience: solo founder.

Focus on simplicity.

Avoid productivity clichés.

Outcome: execution clarity.

35. Reading summary

Create a practical summary of this article.

Audience: operators.

Focus on takeaways.

Outcome: immediate use.

36. Concept explanation

Explain [concept] to non-experts.

Audience: smart but unfamiliar readers.

Focus on intuition.

Avoid jargon.

Outcome: understanding without Googling.

37. Decision journal

Help write a decision journal entry.

Audience: myself.

Focus on assumptions and risks.

Avoid hindsight bias.

Outcome: future learning.

38. Meeting synthesis

Summarize this meeting.

Audience: absent stakeholders.

Focus on decisions and actions.

Avoid play-by-play notes.

Outcome: alignment.

39. Skill gap analysis

Identify skill gaps for [role].

Audience: manager.

Focus on next 6 months.

Avoid generic lists.

Outcome: targeted development.

40. Career narrative

Help articulate my career story.

Audience: hiring managers.

Focus on progression and impact.

Avoid buzzwords.

Outcome: clarity.

41. Case study

Create a case study from this project.

Audience: prospects.

Focus on problem → decision → result.

Avoid self-praise.

Outcome: credibility.

42. Objection handling

Help handle objections about [topic].

Audience: customers.

Focus on empathy.

Avoid defensiveness.

Outcome: reduced friction.

43. Workshop outline

Create a workshop outline on [topic].

Audience: professionals.

Focus on learning outcomes.

Avoid filler activities.

Outcome: value.

44. Content audit

Audit my content.

Audience: me.

Focus on what to double down on.

Avoid generic advice.

Outcome: next actions.

45. Belief reframing

Help reframe this belief.

Audience: myself.

Focus on clarity.

Avoid platitudes.

Outcome: better decisions.

46. Decision explanation

Explain a decision to my team.

Audience: cross-functional team.

Focus on reasoning and trade-offs.

Avoid authority language.

Outcome: buy-in.

47. Learning reflection

Write a reflection on [experience].

Audience: myself.

Focus on transferable lessons.

Avoid fluff.

Outcome: insight.

48. Tool evaluation

Evaluate [tool] for my workflow.

Audience: practitioner.

Focus on fit, not features.

Avoid vendor marketing.

Outcome: clear decision.

49. Time audit

Analyze how I spend my time.

Audience: myself.

Focus on leverage and waste.

Avoid guilt framing.

Outcome: prioritization.

50. Long-term strategy narrative

Create a narrative for our long-term strategy.

Audience: team and stakeholders.

Focus on direction and principles.

Avoid detailed plans.

Outcome: alignment.

Final Thought

You are not searching for magic words.

You are telling the model:

- What you’re trying to achieve

- For whom

- Inside which boundaries

- And how you’ll judge success

That’s not prompting.

That’s leadership, translated for AI.

Once you master context engineering, AI stops feeling unpredictable and starts feeling like a collaborator that actually understands intent.

Because the future of AI work isn’t better prompts.

It’s better thinking.
