45 Context-Driven Questions, Real Answers, and the Judgment Behind Them

Product Sense in the Age of AI

Product sense has always been hard to define.

Not because it’s vague, but because it’s situational.

Great product decisions are rarely about frameworks, feature lists, or confident delivery. They’re about judgment under uncertainty:

- Incomplete data

- Conflicting incentives

- Real constraints

- Irreversible consequences

AI has made the gap between generating output and exercising judgment even more visible.

Today, anyone can generate ideas, specs, or roadmaps.

What still differentiates strong PMs is how they think when things are unclear, especially when autonomy, automation, and scale amplify mistakes.

This guide brings together:

- Core product sense questions

- Context-driven answers (how real PMs reason)

- Modern realities like AI, automation, and agentic systems

Not interview theater.

Not hype.

Just how good product decisions actually get made.

Part 1: What Product Sense Really Is (And What It Isn’t)

Product sense is not:

- Having the “best idea”

- Memorizing frameworks

- Speaking confidently about vague strategy

Product sense is:

The ability to make sound decisions when clarity is missing, tradeoffs are real, and the cost of being wrong matters.

This becomes painfully obvious in production environments, especially with AI-powered systems, where:

- Ambiguous goals compound

- Weak constraints explode

- Missing observability hides failure

- Autonomy magnifies every mistake

The questions below test whether someone understands that reality.

Part 2: Understanding Users & Problems (The Foundation)

1. How do you decide what problem to solve?

Strong answer:

We prioritize problems based on pain, frequency, and leverage, not excitement.

We ask:

- Who experiences this repeatedly?

- What cost does this impose (time, money, risk)?

- What happens if we don’t solve it?

If the answer is “not much,” it’s not a priority.

2. Users are asking for a feature. What do you do?

Strong answer:

We treat feature requests as problem signals, not instructions.

We dig into:

- What job are they trying to accomplish?

- What workaround are they using today?

- Is this a core user or an edge case?

We validate the need, not the shape of the solution.

3. How do you validate that a problem actually exists?

Strong answer:

By looking for behavior, not opinions.

Signals include:

- Drop-offs

- Repeated workarounds

- Support tickets

- Manual processes outside the product

If users say it’s painful but their behavior doesn’t change, something doesn’t add up.

4. How do you identify unmet user needs?

Strong answer:

Unmet needs show up where users:

- Leave the product to finish tasks

- Make repeated errors

- Build parallel systems (spreadsheets, scripts)

Friction is more honest than feedback.

5. How do you prioritize user segments?

Strong answer:

Not by volume.

We prioritize segments based on:

- Retention influence

- Revenue impact

- Strategic importance

Often, power users matter more than the median user.

.png)

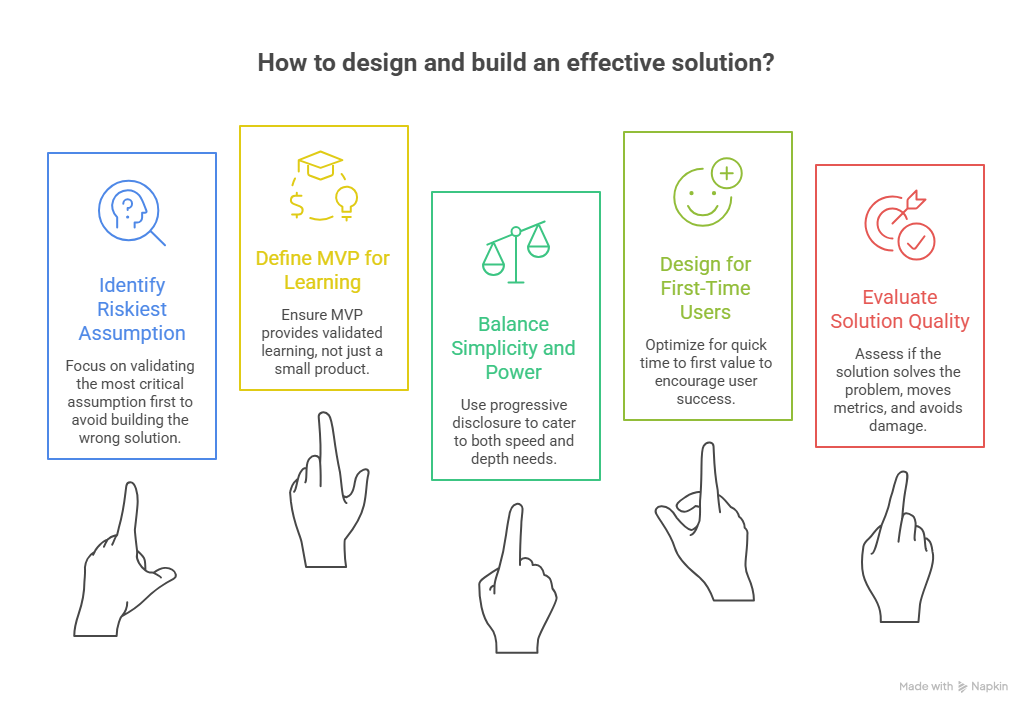

Part 3: Solution Thinking (Avoiding Expensive Mistakes)

6. How do you avoid building the wrong solution?

Strong answer:

By identifying the riskiest assumption first.

We ask:

“What must be true for this to work?”

Then we design the smallest test to validate that, not the full solution.

7. What does MVP mean to you?

Strong answer:

MVP is not the smallest product.

It’s the fastest path to validated learning.

If it doesn’t answer a critical question, it’s not an MVP; it’s just a smaller first release.

8. How do you balance simplicity vs. power?

Strong answer:

Through progressive disclosure.

Most users need speed.

Some need depth.

We design for both without overwhelming either.

9. How do you design for first-time users?

Strong answer:

We optimize for time to first value, not onboarding screens.

If users succeed quickly, they forgive confusion.

If they don’t, no tutorial saves them.

10. How do you know a solution is good?

Strong answer:

When it:

- Solves the original problem

- Moves the right metric

- Doesn’t create downstream damage

Shipping isn’t success. Outcomes are.

Part 4: Metrics, Data & Reality

11. What metric do you track first?

Strong answer:

A metric that reflects user value delivered, not activity.

Usage without value is noise.

12. How do you choose a North Star metric?

Strong answer:

It must:

- Represent user success

- Predict retention

- Align with long-term revenue

If it’s easy to game, it’s the wrong metric.

13. Leading vs. lagging metrics: how do you use them?

Strong answer:

Leading metrics guide decisions.

Lagging metrics confirm outcomes.

Confusing the two leads to false confidence.

14. When should you not A/B test?

Strong answer:

When:

- Risk is high

- Learning is qualitative

- You already know the answer

Not everything benefits from experimentation.

15. How do you evaluate experiment results?

Strong answer:

Beyond averages.

We look at:

- Segment behavior

- Long-term effects

- Second-order consequences
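To make “beyond averages” concrete, here is a minimal Python sketch with made-up numbers (the segment names and counts are hypothetical, not real data). Pooled, the treatment wins; split by segment, power users quietly get worse, which an average-only readout would miss. It deliberately ignores statistical significance; a real analysis would add confidence intervals per segment.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    users: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.users


# Hypothetical experiment results, invented for illustration only.
results = {
    "new_users":   {"control": Arm(8000, 800), "treatment": Arm(8000, 960)},
    "power_users": {"control": Arm(2000, 600), "treatment": Arm(2000, 540)},
}


def lift(control: Arm, treatment: Arm) -> float:
    """Relative change in conversion rate, treatment vs. control."""
    return (treatment.rate - control.rate) / control.rate


def pooled(arm_name: str) -> Arm:
    """Collapse all segments into one arm: the 'average' view."""
    return Arm(
        users=sum(seg[arm_name].users for seg in results.values()),
        conversions=sum(seg[arm_name].conversions for seg in results.values()),
    )


overall = lift(pooled("control"), pooled("treatment"))
per_segment = {name: lift(arms["control"], arms["treatment"]) for name, arms in results.items()}
regressions = [name for name, value in per_segment.items() if value < 0]

print(f"overall lift: {overall:+.1%}")
for name, value in per_segment.items():
    print(f"{name}: {value:+.1%}")
print("segments that got worse:", regressions)
```

The pooled number says “ship it.” The segment view says the change costs exactly the power users that question 5 argues often matter most.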

Part 5: Strategy, Tradeoffs & Constraints

16. Build vs. buy: how do you decide?

Strong answer:

If it’s core to differentiation, we build.

If it’s a commodity, we buy.

Speed matters, but ownership does too.

17. How do you think about competitors?

Strong answer:

We study alternatives, not just competitors.

Often the biggest competitor is:

- Doing nothing

- Manual work

- Existing habits

18. How do you handle technical constraints?

Strong answer:

By trading scope, speed, and quality consciously, not pretending constraints don’t exist.

19. How do you prioritize a roadmap?

Strong answer:

Based on:

- Impact

- Confidence

- Strategic alignment

And we revisit it as learning changes.
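One way to make impact, confidence, and strategic alignment explicit is a simple score that gets recomputed whenever learning changes an input. The sketch below is a hypothetical scoring rule, not a standard framework: the 1-10 and 0-1 scales, the multiplicative form, and the backlog items are all assumptions for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RoadmapItem:
    name: str
    impact: float       # assumed 1-10 estimate of user and business impact
    confidence: float   # assumed 0-1: how much evidence backs the impact estimate
    alignment: float    # assumed 0-1: fit with current strategy

    @property
    def score(self) -> float:
        # Multiplicative: a near-zero on any dimension sinks the item.
        return self.impact * self.confidence * self.alignment


def rank(items: list[RoadmapItem]) -> list[RoadmapItem]:
    return sorted(items, key=lambda item: item.score, reverse=True)


def update_confidence(items: list[RoadmapItem], name: str, confidence: float) -> list[RoadmapItem]:
    """Return a new roadmap with one item's confidence revised after new learning."""
    return [replace(item, confidence=confidence) if item.name == name else item for item in items]


# Hypothetical backlog.
roadmap = [
    RoadmapItem("bulk export", impact=6, confidence=0.9, alignment=0.8),
    RoadmapItem("ai summaries", impact=9, confidence=0.4, alignment=0.9),
    RoadmapItem("sso", impact=5, confidence=0.8, alignment=0.6),
]

before = [item.name for item in rank(roadmap)]
# A pilot produces evidence that "ai summaries" works: revisit, don't defend the old plan.
after = [item.name for item in rank(update_confidence(roadmap, "ai summaries", 0.7))]
print("before:", before)
print("after: ", after)
```

Multiplying rather than adding means a high-impact idea with little evidence behind it doesn’t automatically lead the roadmap. Once the pilot raises confidence, re-ranking moves “ai summaries” from second to first without anyone having to argue for it.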

20. How do you deal with ambiguity?

Strong answer:

We break ambiguity into assumptions and test the riskiest one first.

Part 6: Execution, Teams & Trust

21. How do you work with engineers?

Strong answer:

By bringing clarity, not control.

PMs own why and what.

Engineers own how.

22. How do you say no to stakeholders?

Strong answer:

By tying decisions to shared goals and tradeoffs, not authority.

23. What do you do when timelines slip?

Strong answer:

We look for the system failures behind the slip, not someone to blame.

24. How do you build trust?

Strong answer:

Through consistency, follow-through, and transparency, not charisma.

25. What makes a great PM?

Strong answer:

Someone who repeatedly turns:

- Ambiguity into clarity

- Ideas into outcomes

- Misaligned teams into aligned ones

Part 7: Product Sense in the AI & Agentic Era

26. When should you use AI?

Strong answer:

When variability adds value.

If the task must be deterministic, AI is often the wrong tool.

27. What risks does AI introduce?

Strong answer:

Unpredictability, cost volatility, trust erosion.

Strong PMs design guardrails early.

28. When are agentic systems appropriate?

Strong answer:

When:

- Tasks are long-running

- Human attention doesn’t scale

- Errors are reversible

Autonomy is a cost, not a free upgrade.

29. When are agents the wrong solution?

Strong answer:

When:

- Errors are unacceptable

- Actions are irreversible

- Cost predictability matters more than flexibility
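The two checklists above can be written down as an explicit go/no-go check, which forces a team to answer each question instead of defaulting to autonomy. A minimal sketch, assuming each criterion can be reduced to a yes/no judgment; the field names and example tasks are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TaskProfile:
    # Hypothetical yes/no answers a team fills in before choosing an agent.
    long_running: bool                   # many steps over minutes or hours
    exceeds_human_attention: bool        # too much volume for people to watch
    reversible: bool                     # mistakes can be undone cheaply
    errors_tolerable: bool               # an occasional wrong step is acceptable
    cost_predictability_critical: bool   # budget must be fixed in advance


def agent_fit(task: TaskProfile) -> tuple[bool, list[str]]:
    """Return (fit, blockers) using the criteria from questions 28 and 29."""
    blockers = []
    if not task.long_running:
        blockers.append("task is not long-running")
    if not task.exceeds_human_attention:
        blockers.append("human attention still scales")
    if not task.reversible:
        blockers.append("actions are irreversible")
    if not task.errors_tolerable:
        blockers.append("errors are unacceptable")
    if task.cost_predictability_critical:
        blockers.append("cost predictability matters more than flexibility")
    return (len(blockers) == 0, blockers)


# Hypothetical examples.
overnight_ticket_triage = TaskProfile(True, True, True, True, False)
issuing_refunds = TaskProfile(True, True, False, False, True)

for name, task in [("ticket triage", overnight_ticket_triage), ("refunds", issuing_refunds)]:
    fit, blockers = agent_fit(task)
    print(name, "->", "agent candidate" if fit else f"not a fit: {', '.join(blockers)}")
```

Returning the blockers, not just a boolean, keeps the conversation on which constraint rules autonomy out, which is usually the more useful product discussion.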

30. How do you build trust in AI systems?

Strong answer:

With:

- Observability

- Reversibility

- Clear failure handling

- Human control of irreversible actions
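These four mechanisms can be sketched as a small gate that every agent action passes through. This is an illustrative Python sketch, not any framework’s API: the Action type, the execute function, and the approve callback are hypothetical. Every action is logged (observability), irreversible actions need human approval, and failures come back as an explicit status instead of disappearing. Reversibility is declared by the caller here; the gate itself does not implement undo.

```python
import logging
from dataclasses import dataclass
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("agent.actions")  # hypothetical logger name


@dataclass
class Action:
    name: str
    reversible: bool             # declared by the caller; this gate does not implement undo
    run: Callable[[], object]


def execute(action: Action, approve: Callable[[Action], bool]) -> str:
    """Run one agent action through the gate; returns 'executed', 'rejected', or 'failed'."""
    # Observability: every proposed action is recorded before anything happens.
    log.info("proposed %s (reversible=%s)", action.name, action.reversible)

    # Human control: irreversible actions need explicit approval.
    if not action.reversible and not approve(action):
        log.info("rejected %s", action.name)
        return "rejected"

    # Clear failure handling: surface errors as a status, with the traceback logged.
    try:
        action.run()
    except Exception:
        log.exception("failed %s", action.name)
        return "failed"

    log.info("executed %s", action.name)
    return "executed"


def deny_all(action: Action) -> bool:
    """Hypothetical reviewer who declines every irreversible action."""
    return False


print(execute(Action("save_draft_reply", reversible=True, run=lambda: None), deny_all))  # executed
print(execute(Action("issue_refund", reversible=False, run=lambda: None), deny_all))     # rejected
```

The design choice is that autonomy is the default only where mistakes are cheap; anything irreversible is blocked until a person says yes.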

Part 8: Judgment Under Pressure

31. What do you do when data conflicts with intuition?

Strong answer:

We investigate both.

Data explains what.

Intuition questions why.

32. How do you handle conflicting feedback?

Strong answer:

We weigh feedback by user segment, frequency, and impact, not volume.

33. How do you manage scope creep?

Strong answer:

By protecting the problem statement, not the solution.

34. How do you know when to pivot?

Strong answer:

When evidence consistently invalidates a core assumption.

35. How do you handle failure?

Strong answer:

By extracting learning and fixing systems, not hiding mistakes.

Part 9: Product Maturity

36. How does product sense change with seniority?

Strong answer:

Senior PMs think in systems and second-order effects, not features.

37. How do you sunset features?

Strong answer:

With data, communication, and migration paths, never surprises.

38. How do you maintain quality at scale?

Strong answer:

Through standards, ownership, and feedback loops.

39. How do you onboard new team members?

Strong answer:

By teaching the why before the what.

40. How do you measure success post-launch?

Strong answer:

Against the original problem statement, not vanity metrics.

Part 10: Meta Product Thinking

41. What’s the biggest product mistake teams make?

Strong answer:

Shipping solutions before deeply understanding the problem.

42. What distinguishes great PMs from good ones?

Strong answer:

Judgment under uncertainty.

43. How do you decide what not to build?

Strong answer:

By understanding opportunity cost.

Every “yes” creates multiple “no’s.”

44. How do you keep improving product sense?

Strong answer:

By reflecting on decisions, not just outcomes.

45. What defines product sense in one sentence?

Strong answer:

Product sense is the ability to make high-quality decisions when information is incomplete and tradeoffs are unavoidable.

Final Takeaway

AI has made execution cheaper.

Autonomy has made mistakes louder.

What still matters, more than ever, is judgment.

The PMs who stand out are not the ones with the best ideas, but the ones who:

- Ask better questions

- Respect constraints

- Design for failure

- And know when not to automate

That’s product sense.

And it’s why it’s so hard to fake.
