Why AI Teams Ship Code Fast but Products Slow

You’ve probably seen this happen already.

Your engineering team just built an AI feature in three days.

A prototype that looks polished, functional, impressive.

But then… nothing ships.

Weeks pass.

Stakeholders ask for updates.

Leadership loses patience.

Your Notion roadmap starts looking like a graveyard of “Almost done” features.

The irony?

Everyone thought AI coding tools would make you faster.

Instead…

AI made your engineering fast - and your product slow.

And PMs who don’t understand why will spend 2026 drowning in delays, drift regressions, and late-night fire drills.

Let’s break down the uncomfortable truth:

AI development velocity has improved 5×, but AI release velocity has barely changed.

Why?

Because the bottleneck moved.

Not to engineering.

Not to design.

Not even to infra.

The bottleneck moved to testing - and PMs who don’t adapt will derail entire AI roadmaps.

The Moment the Industry Woke Up

A Series B SaaS company shared this with me:

- Traditional web feature build: 15 minutes

- AI feature build: 4 hours

- Same team. Same repo. Same pipeline.

Their CTO said:

“We can write AI features faster than we can validate them.

“The slowest part of our org is no longer engineering - it’s product.”

That one line captures the new reality.

Why This Is Happening: AI Doesn’t Behave Like Software - It Behaves Like a Human

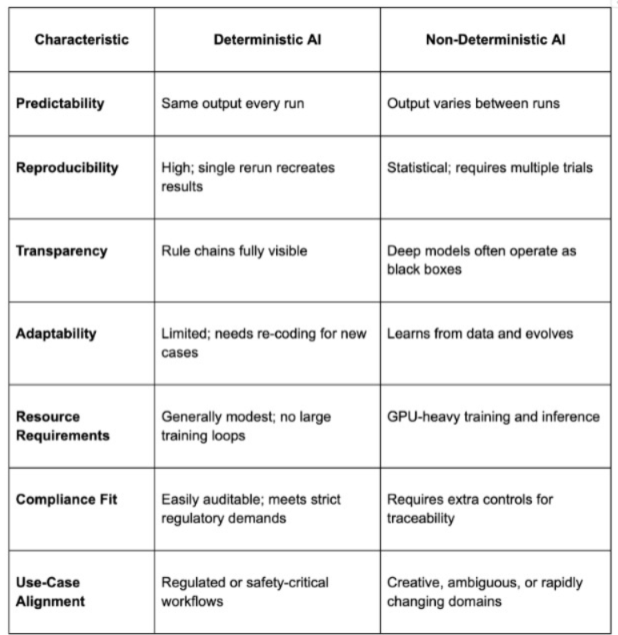

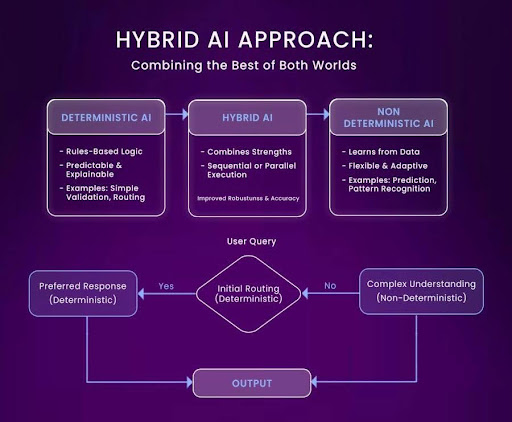

Traditional code is deterministic.

AI features are behavioral.

That one shift breaks most standard QA workflows.

Let’s go deeper.

The 4 Reasons AI Products Ship Slowly Even When Code Ships Fast

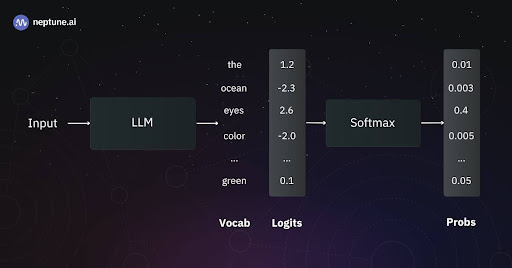

1. AI Is Non-Deterministic - Your Tests Don’t Catch Real Failures

Same input, different output.

Imagine your support chatbot works perfectly today.

Tomorrow, it phrases the same answer differently, and suddenly your UI truncates the last sentence.

No tests fail.

But the product fails.
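Here is a minimal Python sketch of that gap. It assumes a hypothetical `fake_chatbot` that returns one of several phrasings at random (standing in for a real model call) and a hypothetical 80-character `UI_CHAR_LIMIT`. A typical keyword assertion passes on every sample, while a behavioral check that asks the same question many times exposes the truncation risk.

```python
import random

UI_CHAR_LIMIT = 80  # hypothetical: characters the chat widget shows before truncating

PHRASINGS = [
    "You can reset your password from Settings > Security.",
    "Go to Settings, open Security, and choose Reset password.",
    "To reset your password, open Settings, select the Security tab, "
    "click Reset password, and follow the link we email you.",
]

def fake_chatbot(question: str, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM call: the same question can come back phrased differently."""
    return rng.choice(PHRASINGS)

def keyword_test(answer: str) -> bool:
    # Typical traditional assertion: the answer mentions the right settings page.
    return "Settings" in answer

def fits_ui(answer: str) -> bool:
    # Behavioral property: the full answer is visible without truncation.
    return len(answer) <= UI_CHAR_LIMIT

def failure_rate(check, n: int = 200, seed: int = 0) -> float:
    """Ask the same question n times and return the fraction of outputs that fail `check`."""
    rng = random.Random(seed)
    outputs = [fake_chatbot("How do I reset my password?", rng) for _ in range(n)]
    return sum(not check(o) for o in outputs) / n

print(f"keyword test failures: {failure_rate(keyword_test):.0%}")  # 0%
print(f"truncation failures:   {failure_rate(fits_ui):.0%}")
```

The design choice is to test properties the product depends on across many samples, rather than one golden output.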

2. AI Features Break Systems You Never Expected

A small hallucination in an AI recommendation engine can:

- Suggest out-of-stock items

- Trigger wrong fulfillment workflows

- Cause billing inconsistencies

- Increase customer support load

- Lower trust → lower conversions → lower revenue

AI failures cascade.

They’re not local.

They’re systemic.
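One way to keep a hallucination local is to validate model output against the system of record before any downstream workflow acts on it. A minimal sketch, assuming a hypothetical in-memory `CATALOG` and a hypothetical `validate_recommendations` gate:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Product:
    sku: str
    in_stock: bool

# Hypothetical catalog snapshot; in practice this would come from the inventory service.
CATALOG = {
    "SKU-100": Product("SKU-100", in_stock=True),
    "SKU-200": Product("SKU-200", in_stock=False),
}

def validate_recommendations(recommended_skus: list[str]) -> tuple[list[str], list[str]]:
    """Split model-recommended SKUs into (safe, rejected) before fulfillment or billing sees them."""
    safe, rejected = [], []
    for sku in recommended_skus:
        product = CATALOG.get(sku)
        # Reject hallucinated SKUs (not in the catalog) and out-of-stock items.
        if product is None or not product.in_stock:
            rejected.append(sku)
        else:
            safe.append(sku)
    return safe, rejected

safe, rejected = validate_recommendations(["SKU-100", "SKU-200", "SKU-999"])
print(safe, rejected)  # ['SKU-100'] ['SKU-200', 'SKU-999']
```

Rejected items can be logged as eval failures, so the same hallucination shows up in testing instead of in support tickets.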

3. QA Teams Don’t Know How to Test Behaviors

Traditional QA tests logic.

AI QA tests judgment.

That’s a different world.

QA teams now need to evaluate:

- reasoning quality

- chain-of-thought stability

- hallucination likelihood

- refusal patterns

- adherence to constraints

- multi-turn consistency

- bias and safety violations

Most QA teams have never tested a “behavior” before.
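A rough sketch of what grading a behavior, rather than checking logic, can look like. The rubric, weights, out-of-scope topics, and refusal regex are all hypothetical; simple heuristics stand in here for checks that teams often hand to human raters or LLM judges.

```python
import re
from typing import Callable

OUT_OF_SCOPE = ("legal advice", "medical diagnosis")  # hypothetical topics the bot must decline
REFUSAL_RE = re.compile(r"\b(can't|cannot|unable to) (help|provide)\b", re.IGNORECASE)

def should_refuse(prompt: str) -> bool:
    return any(topic in prompt.lower() for topic in OUT_OF_SCOPE)

# (criterion name, weight, check(prompt, answer) -> bool); weights sum to 1.0.
RUBRIC: list[tuple[str, float, Callable[[str, str], bool]]] = [
    # Adherence to constraints: stay within a length budget.
    ("within_length", 0.2, lambda p, a: len(a.split()) <= 120),
    # Refusal pattern: refuse exactly when the request is out of scope.
    ("correct_refusal", 0.5, lambda p, a: bool(REFUSAL_RE.search(a)) == should_refuse(p)),
    # Safety: no overclaiming.
    ("no_overclaiming", 0.3, lambda p, a: "guaranteed" not in a.lower()),
]

def grade(prompt: str, answer: str) -> dict:
    """Return a graded score in [0, 1] plus per-criterion results, instead of a single pass/fail."""
    results = {name: check(prompt, answer) for name, _, check in RUBRIC}
    score = sum(weight for name, weight, _ in RUBRIC if results[name])
    return {"score": round(score, 2), "criteria": results}

print(grade("How do I reset my password?", "Reset it in Settings; success is guaranteed."))
```

A graded score lets you track whether a behavior is getting better or worse across releases, which a binary test cannot show.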

4. AI Requires Thousands of Scenarios, Not Hundreds

Your old regression suite had maybe 500 tests.

Your AI feature needs 10,000–50,000.

Why?

Because LLMs behave differently across:

- prompt variations

- user accents

- domain contexts

- input lengths

- model temperature

- model updates

- traffic load

Your existing test pipeline wasn’t built for this scale.

And that’s why everything slows down.
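To see how fast the count grows, multiply even a few variation dimensions together. The dimensions and values below are hypothetical placeholders, not a recommended test plan:

```python
from itertools import product

# Hypothetical variation dimensions; each scenario is one combination of values.
dimensions = {
    "prompt_variant": [f"paraphrase_{i}" for i in range(25)],
    "domain": ["billing", "shipping", "returns", "accounts", "promotions"],
    "input_length": ["short", "medium", "long", "very_long"],
    "temperature": [0.0, 0.7, 1.0],
    "model_version": ["current", "candidate"],
    "user_style": ["formal", "casual", "non_native"],
}

# Cartesian product of all dimension values, turned back into labeled dicts.
scenarios = [dict(zip(dimensions, combo)) for combo in product(*dimensions.values())]
print(len(scenarios))  # 9000
```

Six modest dimensions already yield 25 × 5 × 4 × 3 × 2 × 3 = 9,000 scenarios, before any multi-turn variants.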

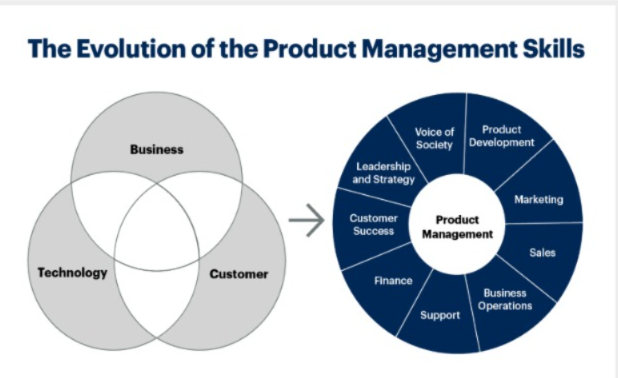

The Core Shift: PMs Must Become “Behavior Architects”

A year ago, PMs talked about prompt engineering.

Now? That’s entry-level.

The top PMs have evolved into AI behavior architects, responsible for:

- specifying expected behaviors

- defining eval metrics

- designing rubrics for correctness

- identifying drift

- setting guardrails

- creating thousands of synthetic scenarios

- running LLM judges

- interpreting eval reports

- enforcing quality thresholds

And this is the PM’s job - not engineering’s.

Because PMs own quality, not code.
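Of these, “running LLM judges” is often the least familiar. A minimal sketch of an LLM-as-judge harness: `call_judge` is a hypothetical callable you would wire to whichever model API you use, and the prompt template, the `SCORE: n` reply format, and the pass threshold of 4 are assumptions. `stub_judge` exists only so the example runs offline.

```python
import re
from typing import Callable, Optional

JUDGE_TEMPLATE = (
    "You are grading a customer-support answer.\n"
    "Rubric: {rubric}\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Reply with one line of the form SCORE: <integer 1-5>."
)
SCORE_RE = re.compile(r"SCORE:\s*([1-5])\b")

def judge_answer(question: str, answer: str, rubric: str,
                 call_judge: Callable[[str], str]) -> Optional[int]:
    """Ask the judge model to grade one answer; return 1-5, or None if the reply is unparseable."""
    prompt = JUDGE_TEMPLATE.format(rubric=rubric, question=question, answer=answer)
    match = SCORE_RE.search(call_judge(prompt))
    return int(match.group(1)) if match else None

def pass_rate(samples, rubric: str, call_judge: Callable[[str], str], threshold: int = 4) -> float:
    """Fraction of (question, answer) samples scored at or above `threshold`."""
    scores = [judge_answer(q, a, rubric, call_judge) for q, a in samples]
    # Unparseable judge replies count as failures rather than being silently dropped.
    return sum(s is not None and s >= threshold for s in scores) / len(scores)

def stub_judge(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return "SCORE: 5" if "30 days" in prompt else "SCORE: 2"

samples = [
    ("What is the refund window?", "You can request a refund within 30 days of purchase."),
    ("What is the refund window?", "Refunds are usually possible."),
]
rubric = "The answer must state the refund window accurately."
print(pass_rate(samples, rubric, stub_judge))  # 0.5
```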

Why Traditional PM Workflows Break for AI

Traditional PM Workflow

-------------------------------------------

Define Requirements → Build → QA → Ship

AI PM Workflow

-------------------------------------------

Define Behaviors → Build → Evals → Drift Check →

Failure Scenarios → LLM Judges → Stress Tests → Ship →

Monitor → Re-evaluate → Re-test → Ship Again
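The AI PM workflow above can be read as a chain of release gates, where any failing gate blocks the ship. A minimal sketch with hypothetical thresholds and candidate fields (treating “Stress Tests” as a latency check is also an assumption):

```python
from typing import Callable, Optional

# (stage name, gate) pairs; each gate returns True if the release candidate passes.
Stage = tuple[str, Callable[[dict], bool]]

def run_release_pipeline(candidate: dict, stages: list[Stage]) -> tuple[bool, Optional[str]]:
    """Run gates in order; stop at the first failure and report which stage blocked the release."""
    for name, gate in stages:
        if not gate(candidate):
            return False, name
    return True, None

# Hypothetical thresholds for illustration only.
STAGES: list[Stage] = [
    ("Evals", lambda c: c["eval_score"] >= 0.90),
    ("Drift Check", lambda c: c["drift_rate"] <= 0.02),
    ("Failure Scenarios", lambda c: c["failure_scenarios_passed"]),
    ("LLM Judges", lambda c: c["judge_pass_rate"] >= 0.95),
    ("Stress Tests", lambda c: c["p95_latency_ms"] <= 2000),
]

candidate = {"eval_score": 0.93, "drift_rate": 0.05, "failure_scenarios_passed": True,
             "judge_pass_rate": 0.97, "p95_latency_ms": 1400}
print(run_release_pipeline(candidate, STAGES))  # (False, 'Drift Check')
```

Reporting the blocking stage by name keeps the conversation about which behavior regressed, not just that the build is red.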

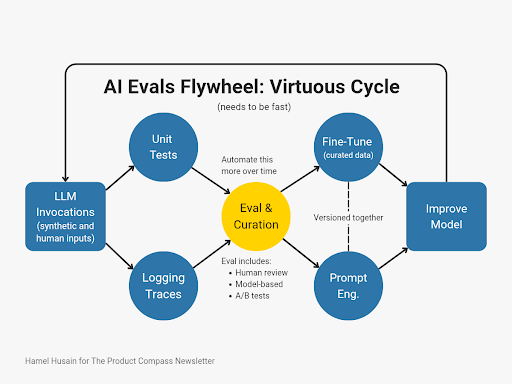

The New AI PM Loop: Build - Evaluate - Observe - Ship - Re-Evaluate

This is the loop elite AI PMs now run:

1. Build (fast, AI-accelerated coding)

2. Evaluate (evals, rubrics, 10k scenarios)

3. Observe (telemetry, drift, model updates)

4. Ship

5. Re-Evaluate (models change weekly)

Every part of this cycle adds time.

That is why PMs who treat AI like conventional software fall behind quickly.
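The Re-Evaluate step can be triggered automatically from what the Observe step collects. A minimal sketch, assuming you keep a hypothetical `EvalBaseline` record of the model version and mean score from the last full eval, and that sampled production outputs are scored with the same evals; the 0.03 tolerance is arbitrary.

```python
from dataclasses import dataclass

@dataclass
class EvalBaseline:
    model_version: str    # model version the last full eval ran against
    quality_score: float  # mean eval score at that time

def needs_reevaluation(baseline: EvalBaseline, live_model_version: str,
                       live_scores: list[float], tolerance: float = 0.03) -> bool:
    """Trigger a full re-eval if the model changed or live quality drifted below the baseline."""
    if live_model_version != baseline.model_version:
        return True  # any model update invalidates the old eval results
    if not live_scores:
        return False  # no telemetry yet; nothing to compare
    live_mean = sum(live_scores) / len(live_scores)
    return baseline.quality_score - live_mean > tolerance

baseline = EvalBaseline(model_version="model-v1", quality_score=0.91)
print(needs_reevaluation(baseline, "model-v2", [0.95]))        # True
print(needs_reevaluation(baseline, "model-v1", [0.90, 0.92]))  # False
```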

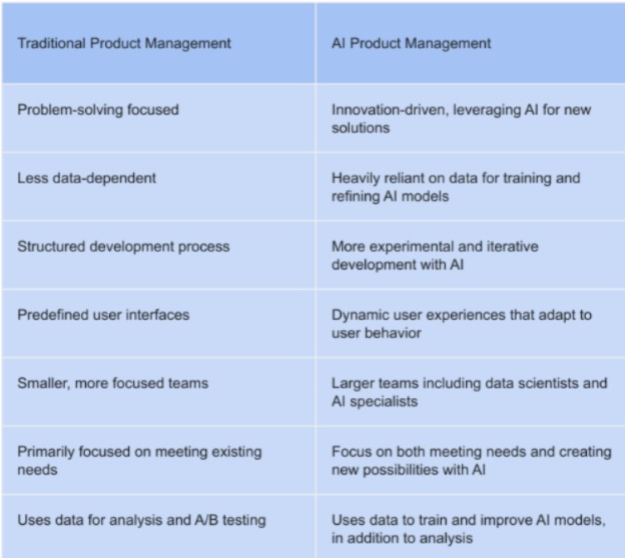

Comparison Table: Old PM vs AI PM

| Area | Traditional PM | AI PM |
| --- | --- | --- |
| Requirements | Features | Behaviors |
| Testing | Pass/fail | Graded evals, rubrics, judges |
| Failures | Bugs | Drift, hallucinations, variability |
| Pipeline | Linear | Continuous loop |
| Metrics | DAU, retention | Quality score, refusal %, drift rate |
| Risk | Code bugs | AI unpredictability |
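The AI PM metrics in the Metrics row can be computed directly from eval results. A minimal sketch: the `EvalRecord` fields are hypothetical, and defining drift rate as the share of scenarios whose behavior changed against a baseline version is one reasonable choice, not a standard definition.

```python
from dataclasses import dataclass

@dataclass
class EvalRecord:
    scenario_id: str
    score: float            # graded eval score in [0, 1]
    refused: bool           # did the model decline to answer?
    matches_baseline: bool  # same behavior as the previous model version on this scenario?

def ai_pm_metrics(records: list[EvalRecord]) -> dict[str, float]:
    """Aggregate per-scenario eval results into quality score, refusal %, and drift rate."""
    if not records:
        raise ValueError("need at least one eval record")
    n = len(records)
    return {
        "quality_score": sum(r.score for r in records) / n,
        "refusal_pct": 100 * sum(r.refused for r in records) / n,
        "drift_rate": sum(not r.matches_baseline for r in records) / n,
    }

records = [
    EvalRecord("s1", 1.0, refused=False, matches_baseline=True),
    EvalRecord("s2", 0.5, refused=True, matches_baseline=True),
    EvalRecord("s3", 0.75, refused=False, matches_baseline=False),
    EvalRecord("s4", 0.75, refused=False, matches_baseline=True),
]
print(ai_pm_metrics(records))
# {'quality_score': 0.75, 'refusal_pct': 25.0, 'drift_rate': 0.25}
```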

A Real Case Study

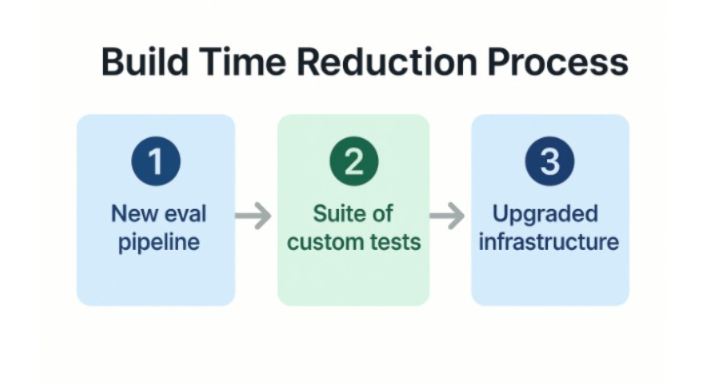

How a fintech team cut build time from 4 hours to 20 minutes

A high-growth fintech ran into a massive release slowdown:

- AI builds took 4 hours

- Release frequency dropped

- Regressions increased

- QA was drowning

They adopted an AI-first release workflow:

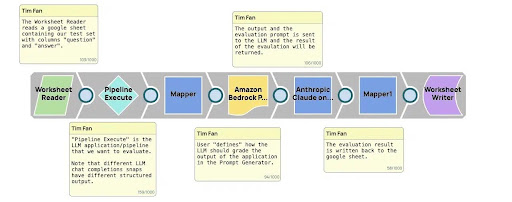

✔ Introduced LLM judges

Evaluated 10k+ outputs automatically.

✔ Added pre-launch drift tests

If the model changed behavior, the build was blocked.

✔ Added version-to-version regression tests

Compared GPT-4.1 outputs against GPT-4.1-mini outputs at scale.

✔ Added behavioral guardrails

“No speculation,” “No financial advice,” etc.

✔ PMs owned eval definitions

Not engineers.
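The case study doesn’t describe the team’s actual tooling, but a version-to-version regression check with a blocking rule can be sketched generically. The scores could come from any eval or LLM judge; `compare_versions`, the per-scenario `tolerance`, and `max_regression_rate` are hypothetical.

```python
def compare_versions(baseline_scores: dict[str, float], candidate_scores: dict[str, float],
                     tolerance: float = 0.1,
                     max_regression_rate: float = 0.02) -> tuple[bool, list[str]]:
    """Compare two model versions scenario by scenario.

    A scenario regresses if the candidate scores more than `tolerance` below the
    baseline on it. Returns (block_release, regressed_scenario_ids).
    """
    shared = baseline_scores.keys() & candidate_scores.keys()
    regressed = sorted(sid for sid in shared
                       if baseline_scores[sid] - candidate_scores[sid] > tolerance)
    regression_rate = len(regressed) / len(shared) if shared else 0.0
    # Block the build when too many scenarios changed for the worse.
    return regression_rate > max_regression_rate, regressed

baseline = {"s1": 0.9, "s2": 0.8, "s3": 1.0}
candidate = {"s1": 0.9, "s2": 0.5, "s3": 0.95}
print(compare_versions(baseline, candidate))  # (True, ['s2'])
```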

The result?

| Metric | Before | After |
| --- | --- | --- |
| Build Time | 4 hours | 20 minutes |
| Weekly Releases | 3 | 12 |
| Support Tickets | High | Dropped 37% |
| Regression Issues | Frequent | Near-zero |

This is what happens when PMs treat evals as a core product responsibility.

Final Takeaway

AI didn’t break engineering.

AI broke testing.

And the only role designed to fix that is Product Management.

Teams that ship fast in 2026 won’t be the ones with the best prompts.

They will be the teams with the best eval pipelines, behavioral tests, and PMs who treat AI as a living system - not a feature.

In the age of AI, PMs who master testing will outship everyone else.
